ExUI is a lightweight, browser-based UI for running local inference with ExLlamaV2. Its interface is minimal and responsive, and it supports persistent sessions, multiple instruct formats, speculative decoding, and EXL2, GPTQ, and FP16 models.

To use ExUI, clone the repository and install the required dependencies, then start the web server with the included server.py script, which opens the application in your browser automatically. By default, configuration and sessions are stored in the ~/exui directory.
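The setup steps can be sketched as a small Python helper. This is a minimal sketch under stated assumptions, not the project's official installer: the repository URL and the `requirements.txt` file name are assumptions not given in the text, and `setup_commands`, `default_data_dir`, and `run_setup` are hypothetical names used only for illustration. Only `server.py` and the `~/exui` default come from the description above.

```python
import subprocess
import sys
from pathlib import Path

# Assumption: upstream repository URL; replace with the URL you actually use.
REPO_URL = "https://github.com/turboderp/exui"


def setup_commands(repo_url=REPO_URL, dest="exui"):
    """Return (argv, working_directory) pairs for the three setup steps."""
    return [
        # 1. Clone the repository into `dest`.
        (["git", "clone", repo_url, dest], None),
        # 2. Install dependencies (assumes the repo ships a requirements.txt).
        ([sys.executable, "-m", "pip", "install", "-r", "requirements.txt"], dest),
        # 3. Start the web server from inside the checkout.
        ([sys.executable, "server.py"], dest),
    ]


def default_data_dir():
    """Default location for configuration and sessions, per the text: ~/exui."""
    return Path.home() / "exui"


def run_setup(commands):
    """Run each step in order, stopping at the first failure."""
    for argv, cwd in commands:
        subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    # Print the plan instead of executing it, so the sketch has no side effects.
    for argv, cwd in setup_commands():
        print(f"[{cwd or '.'}] {' '.join(argv)}")
    print("Config and sessions:", default_data_dir())
```

Calling `run_setup(setup_commands())` would execute the steps for real. The last step keeps running for as long as the server is up, so the call does not return until the server stops.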

Prebuilt wheels are available for ExLlamaV2, and installing the latest version of Flash Attention is recommended for best performance. For users who prefer not to run ExUI locally, an example Colab notebook shows how to run it in Google Colab.

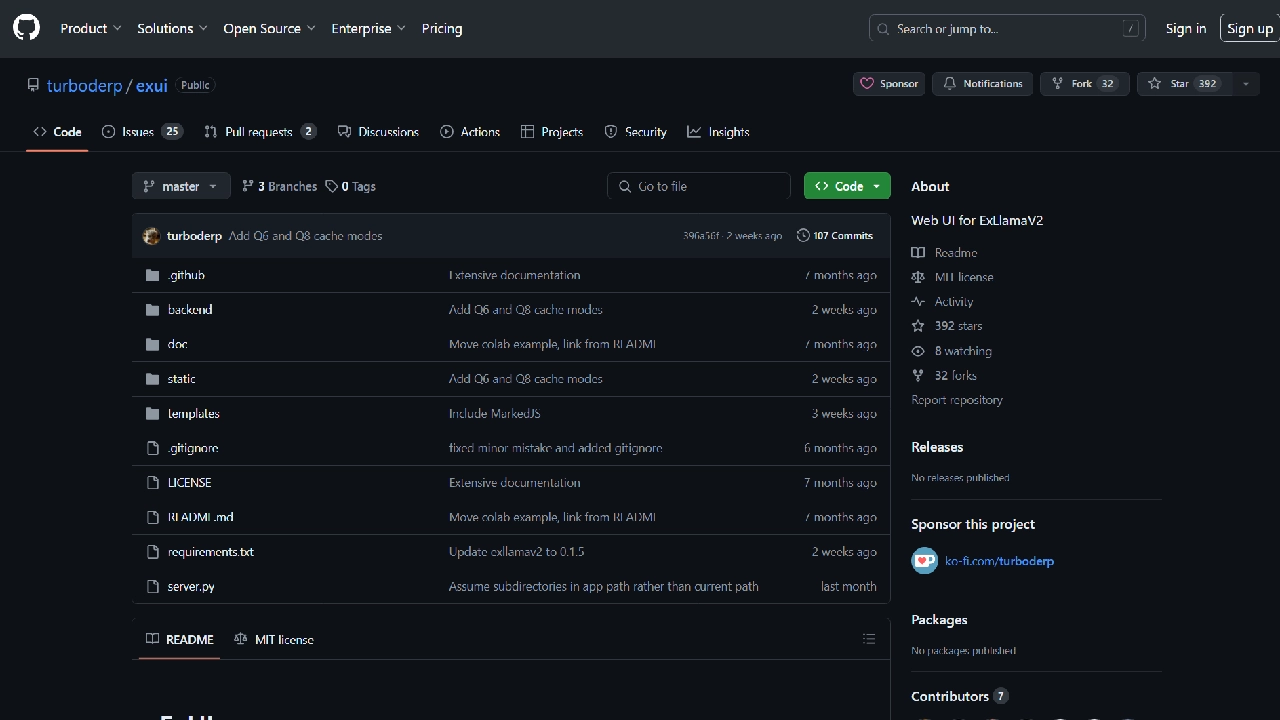

More detailed installation instructions can be found in the project’s documentation. The developers have indicated that further features and improvements are planned.

Overall, ExUI is a convenient front end for local inference with ExLlamaV2, aimed at people working with large language models on consumer-grade hardware.