Alibaba’s Qwen team has released Qwen3-Coder-Flash, its latest coding model variant, which promises “lightning-fast, accurate code generation” backed by some notable technical specifications. The question developers are asking is whether the new model can handle enterprise-level coding challenges or is just another incremental improvement.

What Makes Qwen3-Coder-Flash Different

Understanding Qwen3-Coder-Flash requires examining its architecture and its position within Alibaba’s expanding model ecosystem. The model has 30.5B total parameters, of which about 3.3B are active for any given token. It uses a Mixture-of-Experts architecture, which lets it run efficiently on 64GB Mac systems, or even on 32GB systems when quantized.
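The memory figures above can be sanity-checked with simple arithmetic. The sketch below is a rough estimate only: it counts model weights at a few common bit widths and ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint. The function name `weight_gb` is made up for this illustration.

```python
# Rough weight-memory estimate for a 30.5B-parameter model.
# Assumption: weights only; KV cache, activations, and runtime overhead are excluded.

TOTAL_PARAMS = 30.5e9   # total parameters, as stated in the article
ACTIVE_PARAMS = 3.3e9   # parameters active per token, as stated in the article


def weight_gb(params: float, bits_per_param: float) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: {weight_gb(TOTAL_PARAMS, bits):.2f} GB")
    print(f"active fraction per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
```

Under these assumptions the weights need roughly 61 GB at 16 bits, 30.5 GB at 8 bits, and about 15 GB at 4 bits. Note that a Mixture-of-Experts design lowers the compute per token, not the memory needed to hold the weights: every expert still has to be resident, which is why quantization matters on smaller machines.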

The naming convention reveals strategic positioning. While the broader Qwen3-Coder family includes massive variants like the 480B parameter model, Qwen3-Coder-Flash specifically targets developers who need fast, efficient code generation without requiring enormous computational resources. This approach makes advanced AI coding accessible to individual developers and smaller teams.

Furthermore, the “Flash” designation emphasizes speed optimization. The model is designed as a “non-thinking model that is specially trained for coding tasks”, meaning it focuses on rapid code generation rather than complex reasoning processes that might slow down development workflows.

Technical Architecture Deep Analysis

The Mixture-of-Experts (MoE) architecture changes how the model spends its compute. Unlike traditional dense models, which activate all parameters for every token, Qwen3-Coder-Flash uses a router to activate only a small subset of expert networks for each token. This selective activation substantially reduces the compute per token while retaining the capacity of the full parameter set.
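To make the routing idea concrete, here is a minimal top-k routing sketch in plain Python. It is a toy illustration of the general MoE technique, not Qwen's implementation: the expert count, the value of `k`, and the scalar "experts" are all invented for the example, and in a real model the router logits come from a learned layer applied to each token's hidden state.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_logits, k))


if __name__ == "__main__":
    random.seed(0)
    # Toy experts: scalar multipliers standing in for feed-forward blocks (hypothetical).
    experts = [lambda x, m=m: m * x for m in range(1, 9)]
    logits = [random.gauss(0.0, 1.0) for _ in experts]
    print(route_top_k(logits, k=2))
    print(moe_forward(1.0, experts, logits, k=2))
```

With eight experts and `k=2`, only a quarter of the expert parameters take part in each forward pass, which is the source of the compute savings described above.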

Additionally, the expert layout leaves room for specialization. In principle, different experts can come to handle different programming languages and coding paradigms, so Python code generation might activate different expert combinations than JavaScript or C++ development tasks. Routing is learned during training, however, so experts are not guaranteed to divide neatly along language lines.

The training methodology also emphasizes practical coding scenarios. The Qwen team used Qwen2.5-Coder to clean and rewrite noisy training data, improving overall performance through synthetic data generation techniques. This approach is intended to help the model learn real-world coding patterns rather than just academic programming examples.

https://huggingface.co/Qwen/Qwen3-Coder-30B-A3B-Instruct

Context Length Capabilities Transform Development Workflows

One of Qwen3-Coder-Flash’s most significant advantages lies in its context handling capabilities. The model provides native 256K context support with extension capabilities up to 1M tokens using YaRN (Yet another RoPE extensioN) technology. This extended context window fundamentally changes how developers can interact with AI coding assistants.

Traditional coding models often struggle with large codebases because they cannot maintain sufficient context about project structure, dependencies, and architectural patterns. However, Qwen3-Coder-Flash’s extended context enables it to understand entire repositories, complex inheritance hierarchies, and multi-file dependencies simultaneously.

The practical implications extend beyond simple code completion. Developers can now provide entire project specifications, architectural diagrams, and requirement documents as context, enabling the model to generate code that aligns with broader project goals rather than isolated functionality.
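Whether a given repository actually fits in the window can be estimated before sending anything to the model. The sketch below is a rough budgeting aid under stated assumptions: it uses a crude four-characters-per-token heuristic rather than the model's tokenizer, reads "256K" as 262,144 tokens, and uses hypothetical function names and a hypothetical default list of file extensions.

```python
import os

NATIVE_CONTEXT = 256 * 1024     # "256K" read as 262,144 tokens (assumption)
EXTENDED_CONTEXT = 1_000_000    # "1M" tokens with YaRN, per the article
CHARS_PER_TOKEN = 4             # crude heuristic, not the model's real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string by ceiling division on its length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def repo_token_estimate(root: str, extensions=(".py", ".js", ".ts")) -> int:
    """Sum estimated tokens over all source files under root with the given extensions."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    total += estimate_tokens(handle.read())
    return total


def context_tier(tokens: int) -> str:
    """Classify an estimate against the native and extended context limits."""
    if tokens <= NATIVE_CONTEXT:
        return "native"
    if tokens <= EXTENDED_CONTEXT:
        return "extended"
    return "too large"


if __name__ == "__main__":
    tokens = repo_token_estimate(".")
    print(f"~{tokens} tokens -> {context_tier(tokens)}")
```

An estimate that lands in the "extended" tier signals that the native window is not enough and that the YaRN-extended configuration, or a trimmed-down selection of files, would be needed. The prompt and the generated output also consume tokens, so it is sensible to leave headroom.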

Platform Integration and Developer Ecosystem

Qwen3-Coder-Flash has been optimized for platforms like Qwen Code, Cline, Roo Code, and Kilo Code, indicating Alibaba’s strategic focus on ecosystem development rather than standalone model deployment. This platform-centric approach recognizes that modern development workflows require integrated toolchains rather than isolated AI capabilities.

The integration strategy extends to function calling and agent workflows. The model features a specially designed function call format that supports agentic coding across multiple platforms. This standardization enables developers to create more sophisticated automation workflows that can interact with multiple development tools and services.
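The article does not spell out the model's function call format, so the following sketch only illustrates the general pattern of tool use in an agent loop. It assumes the OpenAI-style tool schema that many serving layers expose. The `read_file` tool, the `dispatch` helper, and the in-memory `workspace` dictionary are hypothetical names invented for this example.

```python
import json

# Hypothetical tool description in the common OpenAI-style JSON schema (assumption:
# the serving endpoint accepts this shape; the model's native format may differ).
READ_FILE_TOOL = {
    "type": "function",
    "function": {
        "name": "read_file",
        "description": "Return the contents of a text file in the workspace.",
        "parameters": {
            "type": "object",
            "properties": {"path": {"type": "string"}},
            "required": ["path"],
        },
    },
}


def read_file(path: str, workspace: dict) -> str:
    """Look up a file in an in-memory workspace; stands in for real disk access."""
    return workspace.get(path, f"error: {path} not found")


def dispatch(tool_call: dict, workspace: dict) -> str:
    """Execute one tool call of the form {'name': ..., 'arguments': '<JSON string>'}."""
    name = tool_call["name"]
    args = json.loads(tool_call["arguments"])
    if name == "read_file":
        return read_file(args["path"], workspace)
    return f"error: unknown tool {name}"


if __name__ == "__main__":
    workspace = {"app.py": "print('hello')"}
    call = {"name": "read_file", "arguments": json.dumps({"path": "app.py"})}
    print(dispatch(call, workspace))
```

In an agent workflow, the tool description is sent along with the conversation, the model replies with a tool call, and the result of `dispatch` is fed back as a new message so the model can continue. Returning errors as strings rather than raising exceptions lets the model see the failure and try a different call.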

Furthermore, the model’s compatibility with popular development environments reduces adoption friction. Developers can integrate Qwen3-Coder-Flash into existing workflows without significant infrastructure changes or learning new interface paradigms. This seamless integration approach contrasts with models that require specialized environments or extensive configuration processes.

The agent workflow capabilities also enable more sophisticated development automation. Teams can create AI agents that handle routine coding tasks, code review processes, and documentation generation while maintaining consistency with project standards and architectural patterns.

Performance Benchmarks and Real-World Testing

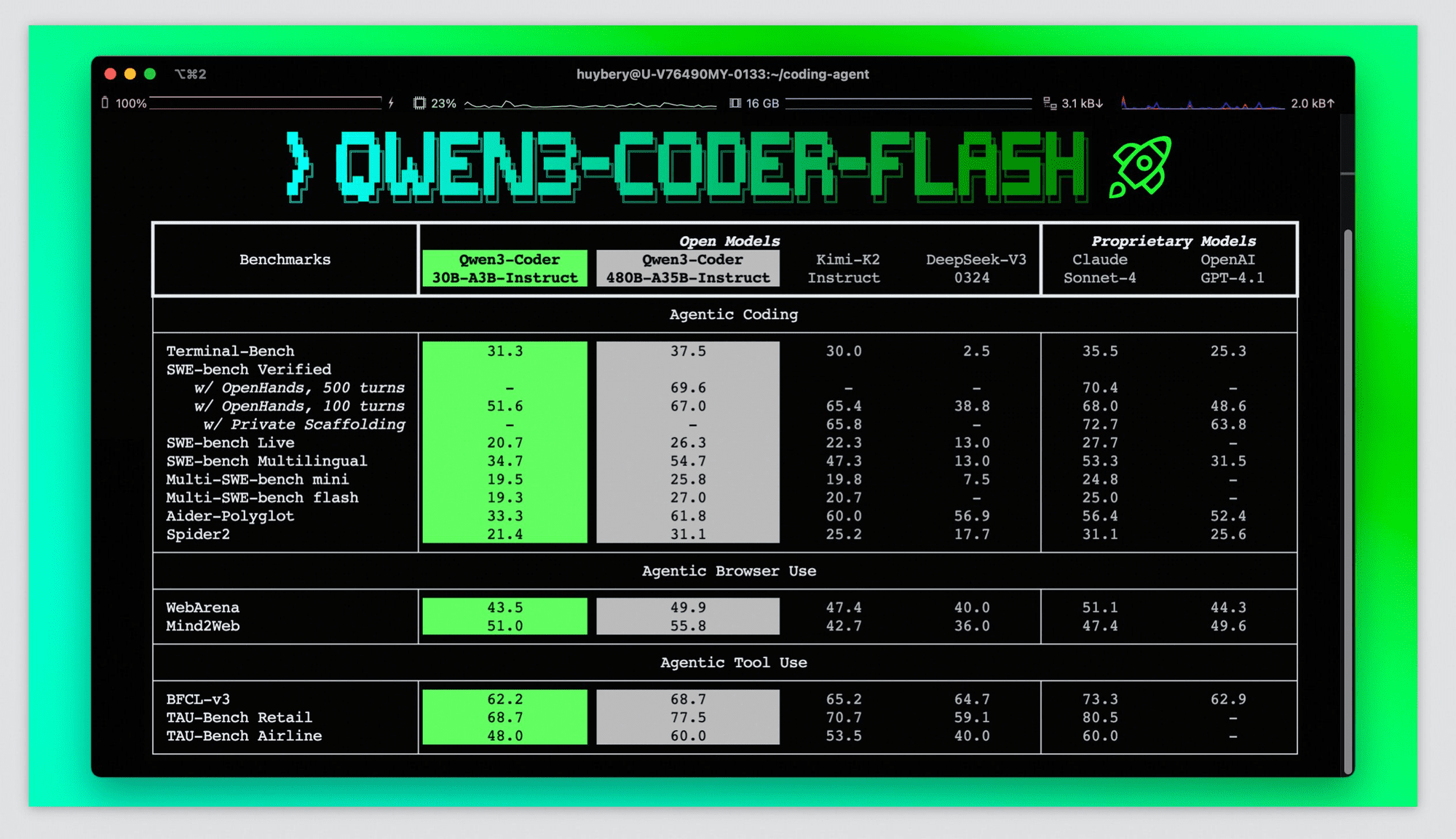

Evaluating Qwen3-Coder-Flash’s performance requires examining both synthetic benchmarks and real-world development scenarios. The broader Qwen3-Coder family achieves state-of-the-art coding performance rivaling Claude Sonnet 4, GPT-4.1, and Kimi K2, scoring 61.8% on the Aider Polyglot benchmark. Specific benchmarks for the Flash variant aren’t yet available. Its architectural similarities suggest it may follow the same trend, but results from the larger variants should not be assumed to carry over unchanged to a much smaller model.

However, benchmark performance only tells part of the story. Real-world development involves complex scenarios that standard benchmarks don’t capture: debugging legacy code, integrating with poorly documented APIs, handling edge cases in production systems, and maintaining code quality across large teams.

Early developer feedback suggests Qwen3-Coder-Flash excels in rapid prototyping scenarios where speed matters more than perfect optimization. The model generates functional code quickly, enabling developers to iterate rapidly during exploration phases. However, production deployment often requires additional review and optimization that the model cannot automatically provide.

The model’s performance also varies significantly across different programming languages and frameworks. While it demonstrates strong capabilities with popular languages like Python and JavaScript, performance with specialized languages or emerging frameworks may be less consistent.