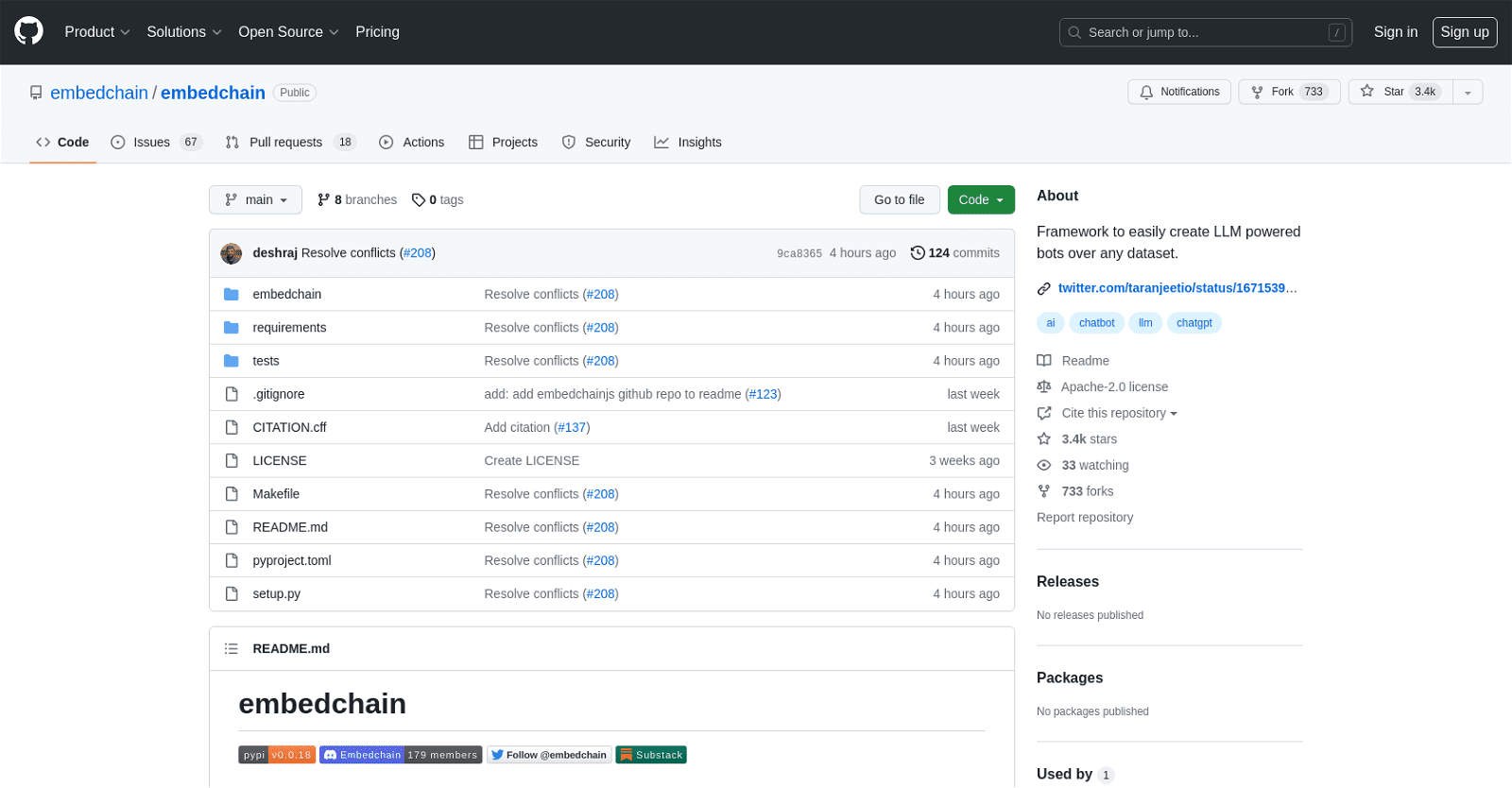

The “embedchain/embedchain” repository on GitHub hosts Embedchain, a framework for building bots powered by Large Language Models (LLMs) over a variety of datasets. It provides a set of functions that simplify creating and deploying these bots, which shortens the development workflow.

Because the project is hosted on GitHub, its contributors can use the platform’s general features: workflow automation, package management, vulnerability detection and fixes, instant development environments, AI-powered code assistance, code review, and collaboration tools that go beyond code. GitHub’s documentation, GitHub Skills, and the GitHub Blog provide further learning resources.

GitHub also offers solutions for enterprises, teams, startups, and educational institutions, covering Continuous Integration/Continuous Deployment (CI/CD) and automation as well as DevOps and DevSecOps, and it publishes case studies, customer stories, and open-source resources. These are GitHub offerings rather than Embedchain features, but projects built with Embedchain can make use of them.

At the time of writing, the repository had 3.4k stars and 733 forks, a sign of its growing popularity. The code is released under the Apache-2.0 license, which permits use, modification, and redistribution, while GitHub itself provides the means to work with the project: raising issues, submitting pull requests, and using GitHub Actions and security features.

In summary, “embedchain/embedchain” is a framework that simplifies building LLM-powered bots over diverse datasets, and its home on GitHub gives it access to the platform’s tooling for development and deployment.

More details about Embedchain

How do I interact with the code of Embedchain on GitHub?

Users can engage with Embedchain’s code through standard GitHub features: forking the repository and changing their own copy, submitting pull requests that propose changes, reporting bugs or requesting features through issues, and cloning the repository to their local machine.

How does Embedchain use Large Language Model technology?

Embedchain powers its bots with Large Language Models (LLMs). An LLM is a machine learning model that, given a sequence of text, predicts the next element of that sequence; repeating this prediction produces a response, which in this case is the answer to the user’s query. Embedchain makes these bots easier to design and use by abstracting the whole data pipeline: loading a dataset, splitting it into chunks, computing embeddings for the chunks, and storing them in a vector database. When a question arrives, the most relevant chunks are retrieved and handed to the LLM as context.
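The load, chunk, embed, store, and retrieve steps that Embedchain hides can be sketched in plain Python to show what happens under the hood. This is not Embedchain’s code: the hashing “embedding”, the `InMemoryVectorStore` class, and `build_prompt` are hypothetical stand-ins for a real embedding model, a vector database, and the LLM call, chosen only so the example runs without external services.

```python
import math
import re
import zlib


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str, dim: int = 256) -> list[float]:
    """Toy embedding: hash each lowercase token into one of `dim` buckets, then L2-normalise."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(token.encode()) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Stand-in for a vector database: keeps (embedding, chunk) pairs in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, document: str) -> None:
        # Chunk the document, embed each chunk, and store the pair.
        for piece in chunk(document):
            self.items.append((embed(piece), piece))

    def search(self, question: str, k: int = 1) -> list[str]:
        # Vectors are unit length, so the dot product equals cosine similarity.
        q = embed(question)
        ranked = sorted(
            self.items,
            key=lambda item: sum(a * b for a, b in zip(q, item[0])),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


def build_prompt(context: list[str], question: str) -> str:
    """Assemble the text an LLM would receive; the LLM call itself is omitted."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}\nAnswer:"
```

For example, after adding one short document about the Eiffel Tower and one about Python, `store.search("Who created the Python programming language?")` ranks the Python chunk first, and `build_prompt` wraps that chunk and the question into the prompt an LLM would answer. A production setup swaps the hashing function for a learned embedding model and the list for a real vector database.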

How do I install and use Embedchain?

Install Embedchain with Python’s package manager by running ‘pip install embedchain’. Then import the appropriate class from Embedchain (‘App’, ‘OpenSourceApp’, or ‘PersonApp’) and create an instance of it. Add your data with the ‘.add’ or ‘.add_local’ methods, then ask questions about it with ‘.query’ or ‘.chat’. If you use ‘App’ or ‘PersonApp’, you must also set an OpenAI API key (the ‘OPENAI_API_KEY’ environment variable).
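The steps above might look like the following sketch. It assumes the call signatures shown in Embedchain’s early (0.0.x) README, where ‘.add’ takes a data type followed by a source and ‘.add_local’ takes a data type followed by the data; later releases changed these signatures, so check the documentation for your installed version. The helper names `make_app`, `load_data`, and `ask`, and the example URL and Q&A pair, are hypothetical.

```python
import os


def make_app():
    """Create an embedchain App, failing early if the OpenAI key is missing."""
    # App and PersonApp need an OpenAI API key in the environment.
    if not os.environ.get("OPENAI_API_KEY"):
        raise RuntimeError("Set OPENAI_API_KEY before creating App or PersonApp")
    from embedchain import App  # deferred import, so the key check runs first
    return App()


def load_data(app, url: str, question: str, answer: str) -> None:
    """Add a web page and a question/answer pair (assumed 0.0.x-era signatures)."""
    app.add("web_page", url)                       # add(data_type, source)
    app.add_local("qna_pair", (question, answer))  # add_local(data_type, data)


def ask(app, question: str, conversational: bool = False) -> str:
    """Ask a question; .chat is the conversational variant of .query."""
    return app.chat(question) if conversational else app.query(question)
```

With a real key set, `app = make_app()` followed by `load_data(app, "https://example.com/faq", "What is X?", "X is ...")` and `ask(app, "What is X?")` would run the full add-then-query cycle. Keeping the key check separate from the import makes a missing key fail with a clear message instead of an error deep inside the OpenAI client.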

How do the CI/CD and Automation features work with Embedchain?

Embedchain is a Python library rather than a CI/CD service, but because a bot built with it is ordinary Python code, it fits into a standard CI/CD pipeline such as GitHub Actions. Continuous integration/continuous deployment automates the steps of app development, including integration, testing, delivery, and deployment. Combined with Embedchain’s framework, this lets bots be built in an automated setting, updated frequently as code or data sources change, and redeployed quickly.
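One small piece of such a pipeline is a fast preflight check that runs before the test suite, so a missing dependency or secret fails the job immediately. The sketch below is hypothetical (the `preflight` and `main` names are illustrative, not part of Embedchain) and checks only the two requirements stated above: the package must be installed, and ‘App’ or ‘PersonApp’ needs an OpenAI API key.

```python
import importlib.util
import os


def preflight(app_class: str = "App", env=None) -> list[str]:
    """Return the problems that would stop an Embedchain bot from starting in a CI job."""
    env = os.environ if env is None else env
    problems = []
    # The package must be installed in the job's environment (pip install embedchain).
    if importlib.util.find_spec("embedchain") is None:
        problems.append("embedchain is not installed")
    # App and PersonApp need an OpenAI key, for example injected from a CI secret.
    if app_class in {"App", "PersonApp"} and not env.get("OPENAI_API_KEY"):
        problems.append(f"{app_class} requires OPENAI_API_KEY")
    return problems


def main(argv: list[str], env=None) -> int:
    """Print each problem and return a process exit code (0 = ready, 1 = not ready)."""
    issues = preflight(argv[0] if argv else "App", env)
    for issue in issues:
        print(f"preflight: {issue}")
    return 1 if issues else 0
```

A pipeline step could call `sys.exit(main(sys.argv[1:]))` from a small script; the non-zero exit code is what makes the CI job stop before any slower integration tests run.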