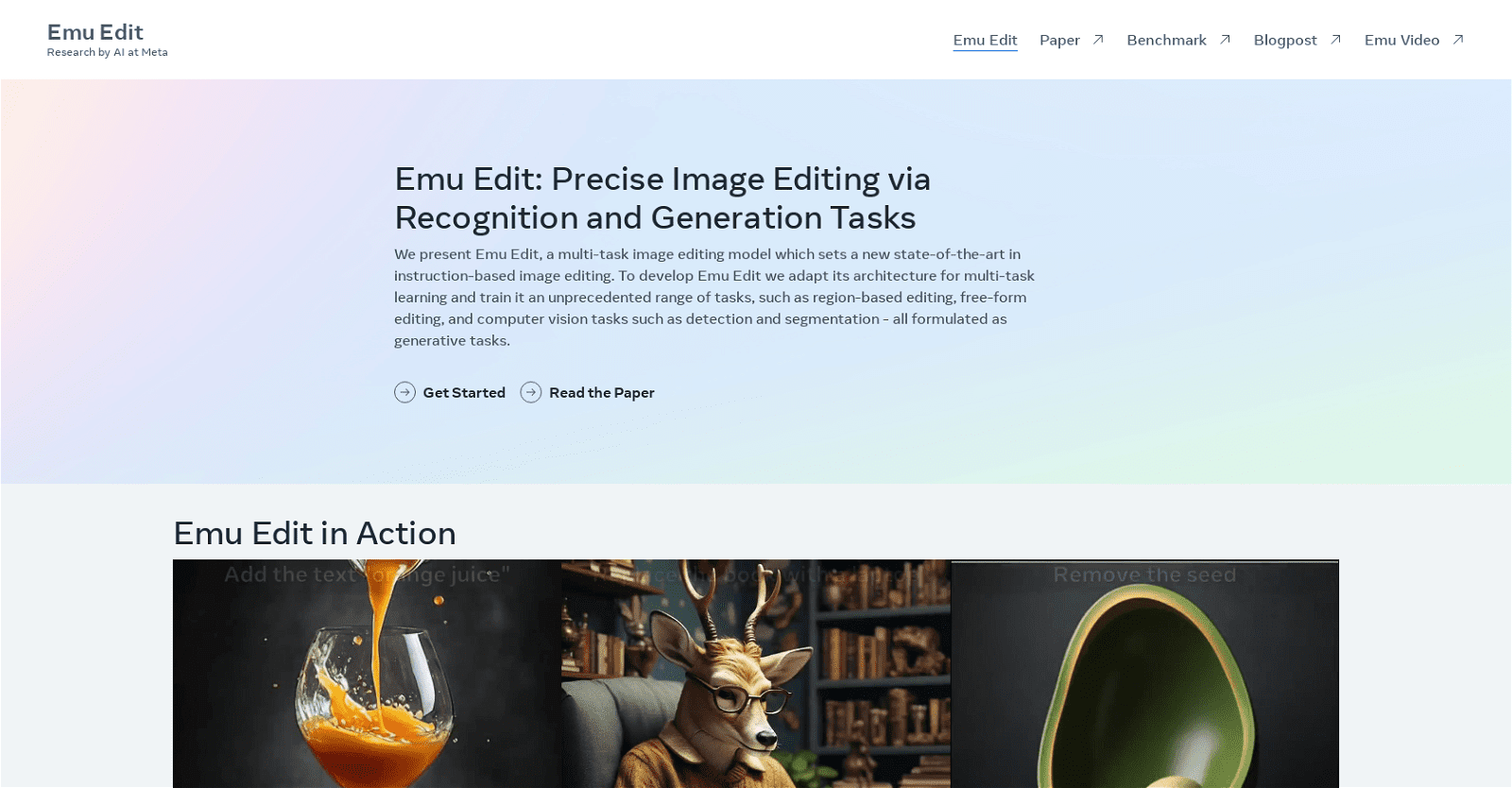

Emu Edit, developed by AI at Meta, is an instruction-based image editing model that sets new benchmarks in editing precision and performance. It is trained with multi-task learning across region-based editing, free-form editing, detection, and segmentation, with every task framed as a generative task.

A standout feature of Emu Edit is how it handles this range of tasks: through learned task embeddings. Each embedding steers the generation process toward the kind of edit the instruction calls for, which improves how accurately the model carries out editing instructions.
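To make the idea of a task embedding concrete, here is a minimal sketch in plain Python. It is not Emu Edit's implementation: the task names, the embedding size, and the choice to add the task vector to a toy timestep embedding are all assumptions made for illustration. It only shows the general pattern of keeping one learned vector per task and using it to condition generation.

```python
import random

# Hypothetical task names and embedding size, chosen only for illustration.
TASKS = ["region_edit", "free_form_edit", "detection", "segmentation"]
EMBED_DIM = 4

rng = random.Random(0)

# One vector per task. Here they are randomly initialised stand-ins;
# in a real model they would be learned jointly with the network weights.
task_embeddings = {
    task: [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for task in TASKS
}


def condition_vector(timestep_embedding, task):
    """Add the selected task embedding to a (toy) timestep embedding."""
    task_vec = task_embeddings[task]
    return [t + e for t, e in zip(timestep_embedding, task_vec)]


# The same timestep embedding yields a different conditioning signal per task.
timestep_embedding = [0.1, 0.2, 0.3, 0.4]
seg_cond = condition_vector(timestep_embedding, "segmentation")
edit_cond = condition_vector(timestep_embedding, "region_edit")
```

Because the conditioning vector differs per task, the same network can be pushed toward segmentation-style output in one call and toward a local edit in another.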

Emu Edit also has few-shot learning capabilities: it adapts to new tasks through task inversion. In task inversion, the model weights stay fixed and only a task embedding is updated, so the model can adapt even when labeled data or compute is limited.
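The mechanics of task inversion can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not Emu Edit's training code: the "model" is a tiny linear function, the weights `W_INPUT` and `W_TASK` and the three examples are made up, and plain gradient descent replaces whatever optimizer the real system uses. What it does show is the core idea: the weights are never touched, and only a new task embedding is fitted to a handful of examples.

```python
# Frozen "model" weights (hypothetical values): never updated below.
W_INPUT = [0.5, -0.3]
W_TASK = [1.0, 2.0]


def predict(x, task_vec):
    """Toy model: a linear function of the input and the task embedding."""
    return (sum(w * xi for w, xi in zip(W_INPUT, x))
            + sum(w * vi for w, vi in zip(W_TASK, task_vec)))


def mse(examples, task_vec):
    """Mean squared error of the toy model over the few-shot examples."""
    return sum((predict(x, task_vec) - y) ** 2 for x, y in examples) / len(examples)


def invert_task(examples, steps=200, lr=0.05):
    """Fit a new task embedding by gradient descent; weights stay fixed."""
    task_vec = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x, y in examples:
            err = predict(x, task_vec) - y
            # d(loss)/d(task_vec[j]) = 2 * err * W_TASK[j], averaged over examples
            for j, w in enumerate(W_TASK):
                grad[j] += 2.0 * err * w / len(examples)
        task_vec = [v - lr * g for v, g in zip(task_vec, grad)]
    return task_vec


# A handful of (input, target) pairs for the "new task".
examples = [([1.0, 0.0], 0.7), ([0.0, 1.0], -0.1), ([1.0, 1.0], 0.4)]
loss_before = mse(examples, [0.0, 0.0])
learned_vec = invert_task(examples)
loss_after = mse(examples, learned_vec)
```

Only `task_vec` changes during the loop, which is why this kind of adaptation is cheap: the number of trainable values is the size of one embedding rather than the size of the whole model.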

To support evaluation of instruction-based image editing models, Emu Edit comes with a benchmark dataset covering seven editing tasks, including background alteration, style alteration, and object addition/removal. The dataset allows Emu Edit’s output to be compared fairly against existing approaches, so its performance can be assessed on common ground.

In summary, Emu Edit is aimed at professionals who need precise, versatile image editing. Its combination of multi-task learning and learned task embeddings lets users carry out a wide range of edits accurately and efficiently.