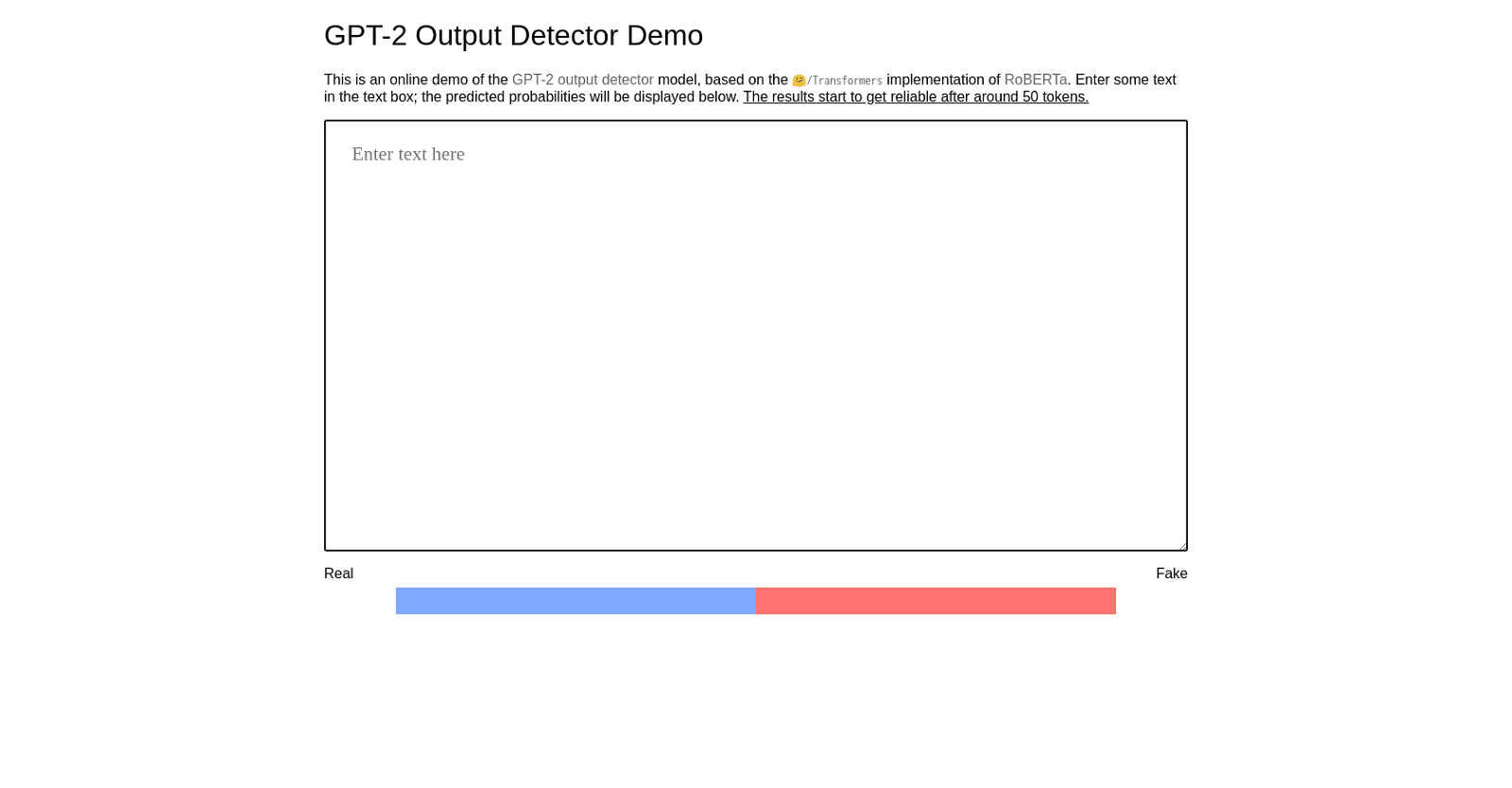

The GPT-2 Output Detector is an online demo of a machine learning model that estimates whether a piece of text was written by a person or generated by GPT-2. It is based on the 🤗/Transformers implementation of RoBERTa, a model originally published by Facebook AI and fine-tuned by OpenAI for this detection task.

Users enter text into the text box, and the predicted probabilities that the text is real (human-written) or fake (machine-generated) are displayed below it. The results start to become reliable after around 50 tokens, so very short inputs should be treated with caution.
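The relationship between the model's raw scores, the two displayed probabilities, and the 50-token guideline can be sketched as follows. This is a minimal illustration, not the demo's actual code: the function names are hypothetical, the raw scores are made-up inputs, and whitespace splitting only approximates the subword tokens the real model counts.

```python
import math

# Threshold quoted in the text above; results below it are less trustworthy.
MIN_TOKENS = 50


def rough_token_count(text: str) -> int:
    """Approximate the token count by splitting on whitespace.

    Assumption: the real detector counts subword tokens from RoBERTa's
    tokenizer, so the true number may differ from this estimate.
    """
    return len(text.split())


def probabilities_from_logits(fake_logit: float, real_logit: float) -> dict:
    """Turn two raw classifier scores into probabilities with a softmax."""
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(fake_logit, real_logit)
    e_fake = math.exp(fake_logit - m)
    e_real = math.exp(real_logit - m)
    total = e_fake + e_real
    return {"fake": e_fake / total, "real": e_real / total}


def report(text: str, fake_logit: float, real_logit: float) -> dict:
    """Combine the probabilities with a flag for the token guideline."""
    result = probabilities_from_logits(fake_logit, real_logit)
    result["reliable"] = rough_token_count(text) >= MIN_TOKENS
    return result
```

For example, `report("A short sentence.", 2.0, -1.0)` returns a higher `fake` than `real` probability, but with `reliable` set to `False` because the input is far below 50 tokens.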

This tool offers a quick way to flag text that may have been machine-generated. Possible applications range from screening news articles for signs of automated writing to filtering out spam content. A high fake probability is a signal worth checking, not proof that a text is deceptive.

More details about GPT-2 Output Detector

What libraries does GPT-2 Output Detector use in its implementation?

The GPT-2 Output Detector is implemented with the 🤗/Transformers library.
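As a rough illustration of what running such a detector through the Transformers library could look like, here is a hedged sketch built on the library's `pipeline` helper. The checkpoint id `openai-community/roberta-base-openai-detector` is an assumption (it is not named in this article), as are the helper names `load_classifier` and `detect`. The label names returned depend on the checkpoint's configuration.

```python
from typing import Callable, Dict, Optional

# Assumption: a Hugging Face Hub checkpoint id for the detector model.
MODEL_ID = "openai-community/roberta-base-openai-detector"


def load_classifier() -> Callable:
    """Build a text-classification pipeline (downloads the model on first use)."""
    # Imported lazily so the module can be loaded without the library installed.
    from transformers import pipeline

    return pipeline("text-classification", model=MODEL_ID)


def detect(text: str, classifier: Optional[Callable] = None) -> Dict[str, float]:
    """Return a mapping from each label to its predicted probability."""
    clf = classifier if classifier is not None else load_classifier()
    # top_k=None asks for scores of all labels; truncation guards long inputs.
    scores = clf(text, top_k=None, truncation=True)
    # Some library versions nest the result one level deeper for a single input.
    if scores and isinstance(scores[0], list):
        scores = scores[0]
    return {item["label"]: float(item["score"]) for item in scores}
```

Calling `detect("Some passage of at least a few sentences ...")` would load the model and return one probability per label. Passing a `classifier` argument lets the same function be reused with an already loaded pipeline, which avoids reloading the model for every call.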

How effective is GPT-2 Output Detector in detecting fake news articles?

No specific accuracy figures for news articles are given here. The detector estimates whether text was generated by GPT-2, which can help flag machine-written articles, but it does not check whether the claims in an article are true.

What technology is GPT-2 Output Detector based on?

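The GPT-2 Output Detector is based on RoBERTa, a language model originally published by Facebook AI. It uses the 🤗/Transformers implementation of RoBERTa, fine-tuned by OpenAI to distinguish GPT-2 output from human-written text.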

What’s the minimum token requirement for reliable results from GPT-2 Output Detector?

Results from the GPT-2 Output Detector start to become reliable after around 50 tokens, so it’s best to provide at least that much text.