HateHoundAPI is an AI-powered toxic content detector for identifying and filtering out toxic comments in web applications. Its AI model classifies comments quickly, offering an alternative to the slow and costly moderation processes traditionally used.

As an open-source tool, HateHoundAPI gives developers and organizations the flexibility to tailor it to their specific needs, strengthening content control across platforms. Getting started is designed to be simple: developers connect their GitHub accounts to obtain access to the API and begin using it.

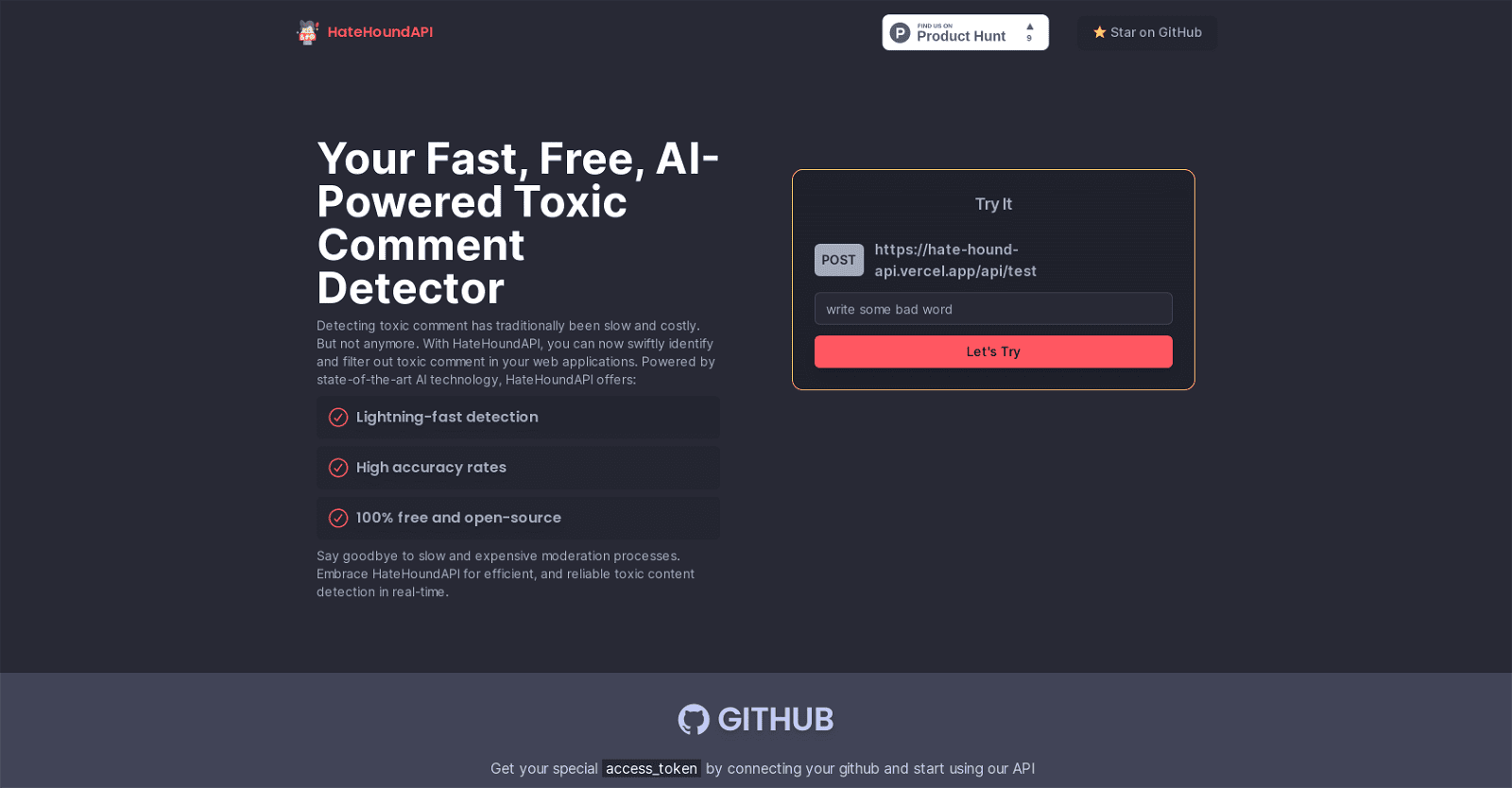

Using HateHoundAPI involves sending a POST request containing a comment and an access token; the API responds with a prediction of the comment's toxicity. Because each comment can be checked as it is submitted, this supports real-time moderation and safer, better-regulated online conversations.
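The request flow can be sketched with Python's standard library. This is a minimal sketch under stated assumptions, not the official client: the endpoint URL (`API_URL`), the JSON field names (`comment`, `token`), sending the token in the body rather than a header, and the shape of the JSON response are all hypothetical placeholders. Check the HateHoundAPI documentation for the real values.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL given in the HateHoundAPI docs.
API_URL = "https://example.com/api/predict"


def build_request(comment: str, token: str, url: str = API_URL) -> urllib.request.Request:
    """Build a POST request carrying the comment text and the access token."""
    # Assumed payload shape: a JSON object with the comment and the token.
    # The real API might expect different field names or an Authorization header.
    payload = json.dumps({"comment": comment, "token": token}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(comment: str, token: str, url: str = API_URL, timeout: float = 5.0) -> dict:
    """Send the request and decode the JSON prediction returned by the API."""
    req = build_request(comment, token, url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # The structure of this dict depends on the actual API response.
        return json.loads(resp.read().decode("utf-8"))
```

Separating `build_request` from `predict` keeps the request construction testable offline, while `predict` performs the actual network call once a real endpoint and token are available.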

Functionally, HateHoundAPI focuses on identifying toxic content so that web applications can filter it out. By flagging harmful comments quickly, it lets applications remove them before they spread, improving online safety and the overall quality of online interactions.
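On the application side, filtering usually means deciding per comment whether to publish it or hold it back. The sketch below assumes the prediction has already been reduced to a yes/no toxicity decision; the `moderate` function and the keyword-based stand-in predicate are hypothetical illustrations, not part of HateHoundAPI. In a real deployment the predicate would wrap a request to the API instead.

```python
from typing import Callable, Iterable, List, Tuple


def moderate(
    comments: Iterable[str],
    is_toxic: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Split comments into (published, held_back) using a toxicity predicate."""
    published: List[str] = []
    held_back: List[str] = []
    for comment in comments:
        # In production, is_toxic would call the detection API for each comment.
        (held_back if is_toxic(comment) else published).append(comment)
    return published, held_back


# Stand-in predicate for offline use only; it is NOT the HateHoundAPI model.
def fake_is_toxic(text: str) -> bool:
    return "idiot" in text.lower().split()


ok, flagged = moderate(["Nice post!", "You idiot"], fake_is_toxic)
print(ok, flagged)
```

Passing the predicate in as a parameter keeps the moderation logic independent of how the toxicity decision is made, so the stand-in can be swapped for a real API call without changing `moderate`.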

To obtain access to HateHoundAPI, users connect their GitHub accounts by following the instructions on the website. The website may not provide specific troubleshooting details, however, so users who run into problems can consult the API documentation or, since the tool is open source, ask the broader community for help.