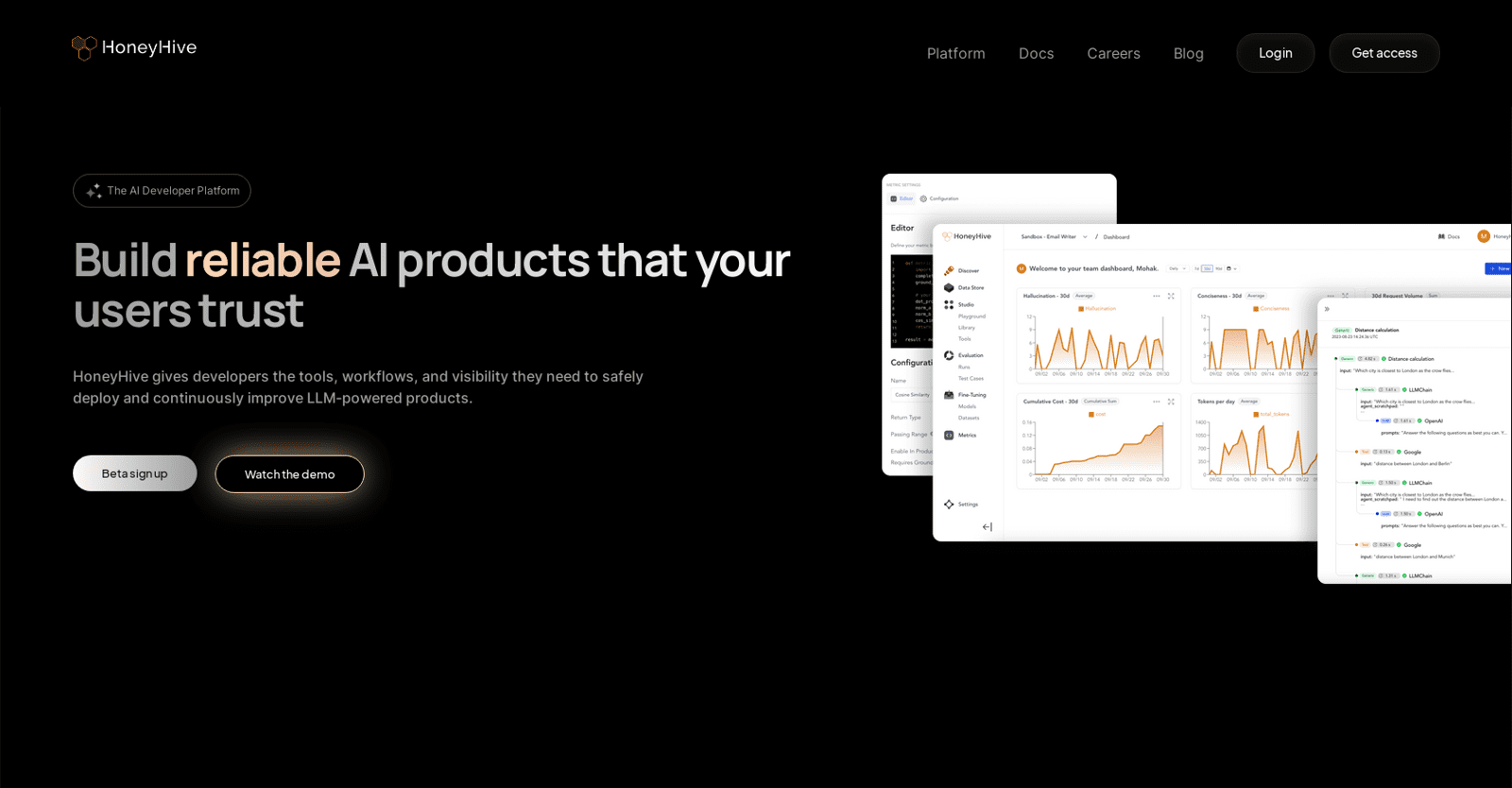

HoneyHive is an AI developer platform that helps teams safely deploy and continuously improve large language models (LLMs) in production.

HoneyHive's monitoring and evaluation tools work with any model, framework, or environment and are designed to keep LLM agents performing reliably. Before release, developers can run evaluation test suites for offline assessment. After release, they can use monitoring for observability and analytics, which lets them ship LLM-powered products with confidence.

The platform provides a version-controlled workspace where developers can iterate on prompts together with project managers and domain experts. It also simplifies debugging of complex chains, agents, and RAG pipelines through AI-assisted root cause analysis.

For data scientists, HoneyHive provides evaluation metrics, guardrails, a model registry, and a version management system for tracking experiments and analyzing performance. Application teams can use the same platform to access data and insights on a self-serve basis.

HoneyHive integrates with any LLM stack and supports a wide range of models, frameworks, and external plugins. Its pipeline-centric approach is built for complex chains, agents, and retrieval pipelines. Its SDK is non-intrusive and does not route requests through HoneyHive's servers.

For enterprise-grade security and scalability, HoneyHive offers end-to-end encryption, role-based access controls, and data privacy safeguards. Users can deploy on HoneyHive Cloud or in their own virtual private cloud (VPC), so they keep ownership of their data.

Users also have access to dedicated customer success managers (CSMs) and 24/7 founder-led support at every stage of AI development. HoneyHive positions itself as a partner that helps teams handle the complexities of AI development.