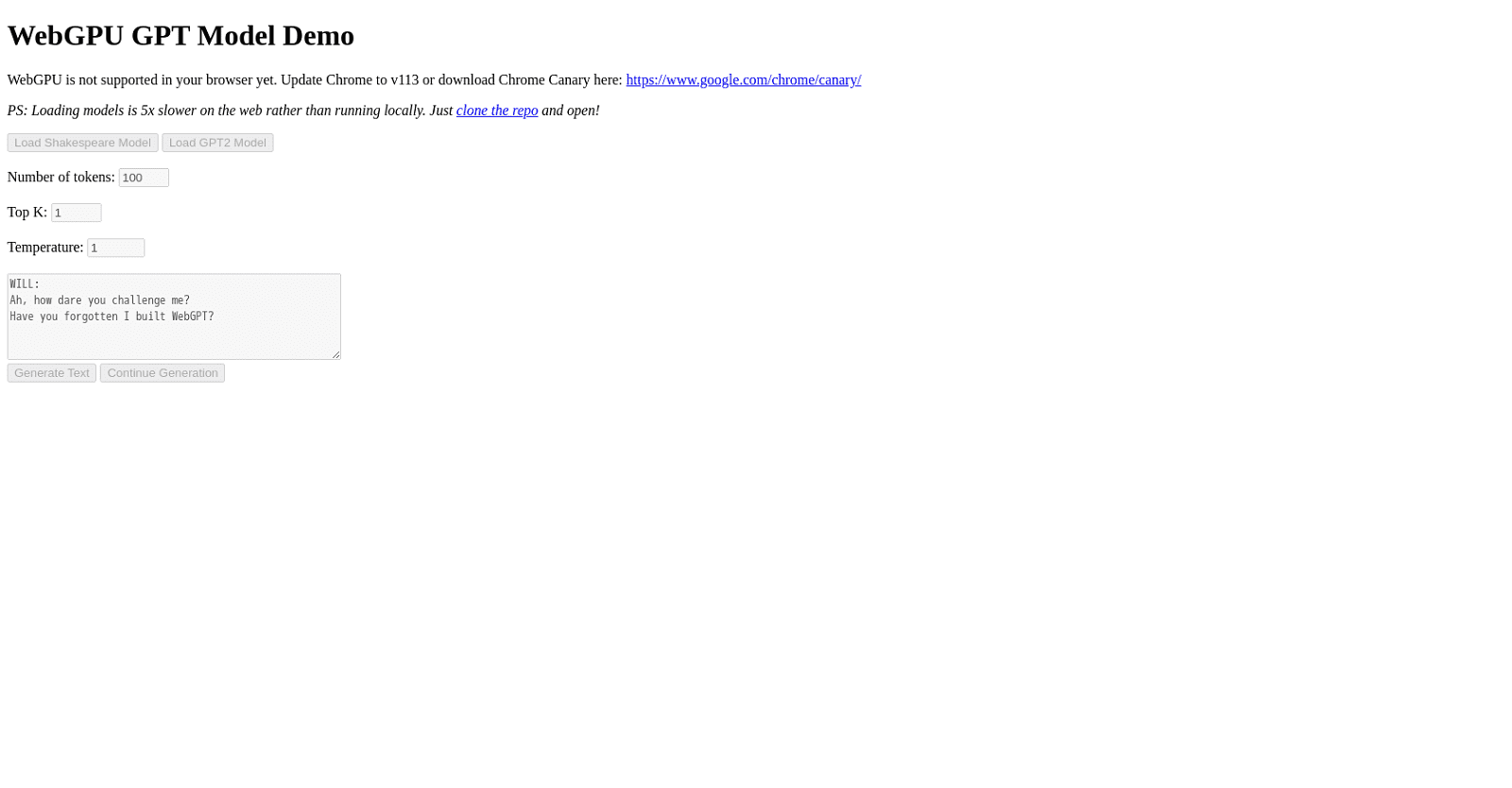

The WebGPU GPT Model Demo is a web-based tool that uses WebGPU to showcase the text generation capabilities of a GPT model directly in the browser. Because it depends on WebGPU, it should be opened in an up-to-date version of Google Chrome or in Chrome Canary.
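A page that depends on WebGPU usually checks for it before loading a model. The following is an assumption about how such a check might look, not code from the demo. `navigator.gpu` and `requestAdapter()` are the standard WebGPU entry points, while `detectWebGPU`, `NavigatorLike`, and the status values are hypothetical names. The minimal interfaces let the sketch compile without the `@webgpu/types` package.

```typescript
// Minimal structural types so the sketch compiles without the @webgpu/types package.
interface MinimalGPU {
  requestAdapter(): Promise<unknown>;
}
interface NavigatorLike {
  gpu?: MinimalGPU;
}

type WebGPUStatus = "unsupported" | "no-adapter" | "ready";

// Hypothetical helper: reports whether WebGPU can actually be used in this browser.
async function detectWebGPU(nav: NavigatorLike): Promise<WebGPUStatus> {
  // navigator.gpu is only defined in browsers that expose WebGPU.
  if (!nav.gpu) {
    return "unsupported";
  }
  // requestAdapter() resolves to null when no suitable GPU adapter is available.
  const adapter = await nav.gpu.requestAdapter();
  return adapter === null ? "no-adapter" : "ready";
}
```

In a browser, `detectWebGPU(navigator as unknown as NavigatorLike)` resolving to `"unsupported"` is a sign to switch to an up-to-date Chrome or to Chrome Canary, as recommended above.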

When the tool opens, users can load a model, such as the Shakespeare Model or the GPT2 Model, and adjust settings including the number of tokens to generate, top K (how many of the most likely next tokens are considered at each step), and temperature. They can then enter a prompt or opening sentence for the model to generate from, and optionally continue the generated text to extend it further.
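The demo's source is not quoted here, so the following is a minimal sketch, assuming the conventional approach, of how top K and temperature typically combine in a single sampling step: keep the K highest-scoring tokens, divide their logits by the temperature, apply a softmax, and draw one token. The function name `sampleTopK` and its signature are hypothetical.

```typescript
// Hypothetical helper: one sampling step combining top-K filtering and temperature.
// `rand` defaults to Math.random but can be injected for reproducible results.
function sampleTopK(
  logits: number[],
  topK: number,
  temperature: number,
  rand: () => number = Math.random,
): number {
  if (logits.length === 0) throw new Error("logits must be non-empty");
  if (temperature <= 0) throw new Error("temperature must be positive");
  const k = Math.max(1, Math.min(topK, logits.length));

  // Rank token indices by logit, highest first, and keep only the top K.
  const candidates = logits
    .map((logit, index) => ({ index, logit }))
    .sort((a, b) => b.logit - a.logit)
    .slice(0, k);

  // Softmax over the surviving candidates, with logits divided by the temperature.
  // Subtracting the largest scaled logit keeps Math.exp from overflowing.
  const maxScaled = candidates[0].logit / temperature;
  const weights = candidates.map((c) => Math.exp(c.logit / temperature - maxScaled));
  const total = weights.reduce((sum, w) => sum + w, 0);

  // Draw one token index from the renormalized distribution.
  let r = rand() * total;
  for (let i = 0; i < candidates.length; i++) {
    r -= weights[i];
    if (r < 0) return candidates[i].index;
  }
  return candidates[candidates.length - 1].index; // guard against floating-point rounding
}
```

With `topK` set to 1 this reduces to always picking the most likely token; larger values of `topK` let less likely tokens through, and the temperature then decides how strongly the most likely of them is preferred.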

Note that loading a model in the online demo can take longer than in a local run. To speed this up, users can clone the repository and run the models locally.

In summary, the WebGPU GPT Model Demo is a useful resource for developers and enthusiasts who want to explore and experiment with the text generation capabilities of GPT models. Because it relies on WebGPU, it works best in an up-to-date version of Google Chrome or in Chrome Canary.

More details about the WebGPU GPT Model Demo

Why does loading models take longer in WebGPU GPT Model Demo?

The WebGPU GPT Model Demo loads models more slowly because it downloads them over the internet. Loading them from local storage, for example after cloning the repository and running the demo locally, avoids this transfer and is faster.

Can the generated text be continued for further development?

Yes. After a generation finishes, users can choose to continue it, so the model extends the text from where it left off.
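In a typical autoregressive setup, continuing generated text means feeding the prompt plus everything generated so far back in as the new context. The sketch below assumes a hypothetical `NextTokenFn` callback that stands in for one model forward pass plus sampling; `generate` is likewise a hypothetical name, not the demo's API.

```typescript
// Hypothetical signature: given the full token sequence so far, return the next token id.
// In a real demo this would be a model forward pass followed by sampling.
type NextTokenFn = (context: readonly number[]) => number;

// Generate `tokenCount` new tokens after `context` and return the extended sequence.
// The input array is copied, so the caller's context is left unchanged.
function generate(context: readonly number[], tokenCount: number, nextToken: NextTokenFn): number[] {
  const tokens = [...context];
  for (let i = 0; i < tokenCount; i++) {
    tokens.push(nextToken(tokens));
  }
  return tokens;
}
```

Calling `generate` a second time with the first call's output as `context` is the continuation step. A real GPT model has a fixed maximum context length, so an implementation would typically crop the sequence to its most recent tokens before each forward pass; the sketch omits that detail.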

Who is the WebGPU GPT Model Demo intended for?

The primary audience for the WebGPU GPT Model Demo is developers and hobbyists who want to explore and experiment with the text generation features of the GPT model.

What is the temperature setting on the WebGPU GPT Model Demo?

The ‘temperature’ setting in the WebGPU GPT Model Demo controls how random the generated text is. Higher temperatures produce more varied and less predictable text, while lower temperatures make the model stick more closely to its most likely next tokens.
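For reference, temperature is conventionally applied by dividing the model's logits $z_i$ by $T$ before the softmax. Assuming the demo follows this standard formulation, the probability of choosing token $i$ is:

$$
p_i = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}
$$

As a worked example with three logits $(2, 1, 0)$: $T = 0.5$ gives probabilities of about $(0.867, 0.117, 0.016)$, $T = 1$ gives about $(0.665, 0.245, 0.090)$, and $T = 2$ gives about $(0.506, 0.307, 0.186)$. Raising $T$ flattens the distribution toward uniform, while lowering it concentrates probability on the highest-scoring token.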