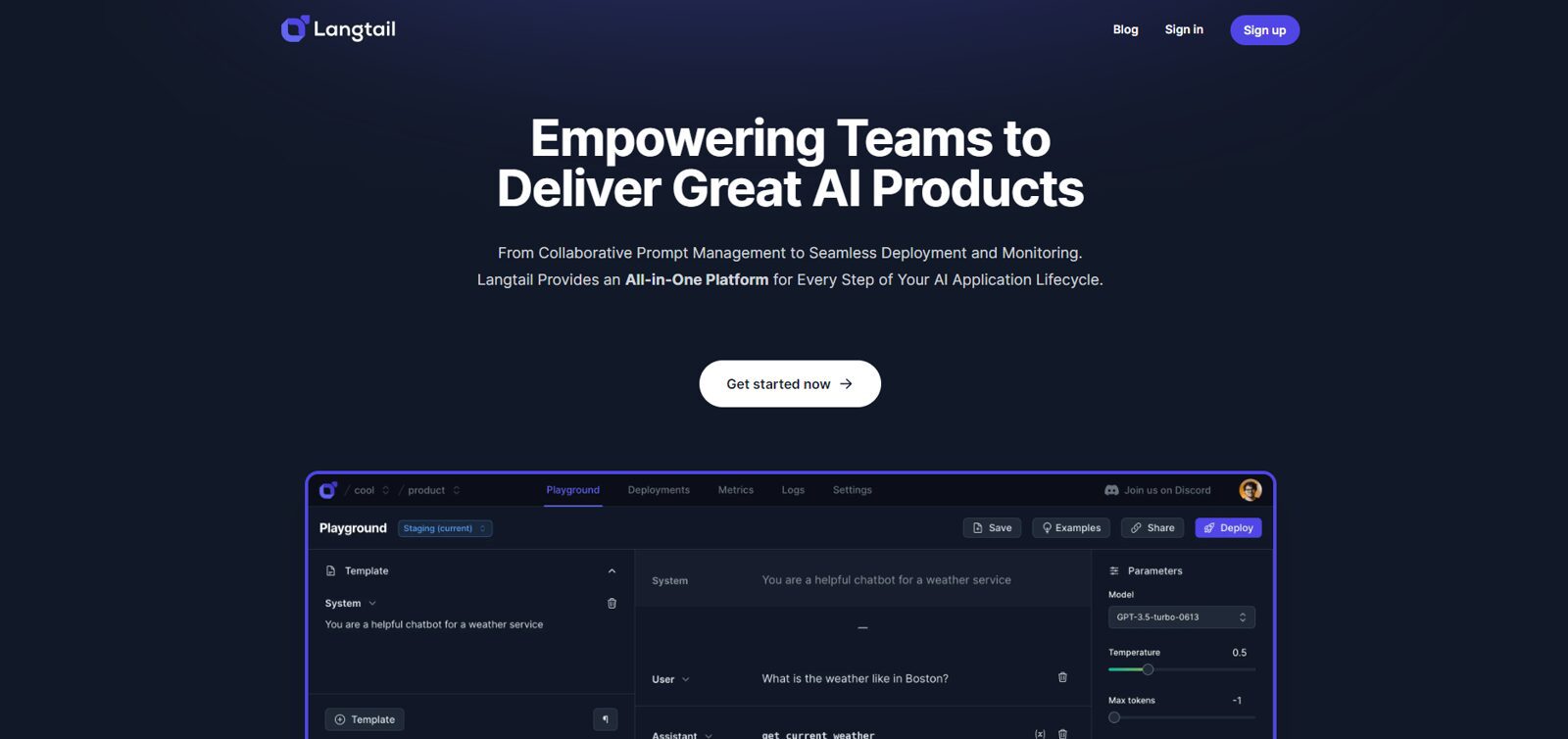

Introducing LangTale, a cutting-edge platform designed to streamline the management of Large Language Model (LLM) prompts, helping teams collaborate more efficiently and gain deeper insight into how their AI applications behave.

LangTale offers a suite of features that tame the complexity of managing LLM prompts and make the work accessible to technical and non-technical team members alike. From quick prompt integration that eases adoption to robust analytics and reporting, LangTale aims to be a complete solution for prompt management.

With LangTale, users can collaborate, fine-tune prompts, track versions, run tests, keep logs, configure environments, and receive real-time alerts, all from a single interface. The platform also integrates smoothly with existing systems and applications: each prompt can be deployed as its own independent API endpoint.

To make testing and deployment efficient, LangTale lets users set up a separate environment for each prompt. Its debugging and testing tools help teams pinpoint and resolve issues quickly, keeping prompts performing at their best.

LangTale also supports dynamic LLM provider switching, moving to another provider when one suffers a disruption or a latency spike so that applications keep running. For developers, the platform adds further capabilities such as rate limiting and continuous integration for LLMs.
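The source does not describe how LangTale decides when to switch providers, so the following is only a generic, hypothetical sketch of the idea in Python: try providers in a fixed priority order and fall through to the next one when a call raises an error or exceeds a latency budget. The function `complete_with_fallback`, the `timeout_s` parameter, and the stand-in provider functions are illustrative assumptions, not part of LangTale's API.

```python
import concurrent.futures
import time
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical provider type: a display name plus a function mapping a prompt to a completion.
Provider = Tuple[str, Callable[[str], str]]


class AllProvidersFailed(Exception):
    """Raised when every provider either errored or exceeded the latency budget."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Provider], timeout_s: float = 2.0
) -> Tuple[str, str]:
    """Return (provider_name, completion) from the first provider that answers in time."""
    errors: Dict[str, str] = {}
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=max(1, len(providers)))
    try:
        for name, call in providers:  # providers are tried in priority order
            future = pool.submit(call, prompt)
            try:
                return name, future.result(timeout=timeout_s)
            except concurrent.futures.TimeoutError:
                # Latency spike: stop waiting on this provider and move to the next one.
                errors[name] = f"timed out after {timeout_s}s"
            except Exception as exc:
                # Outage or API error: record it and fall through to the next provider.
                errors[name] = repr(exc)
        raise AllProvidersFailed(errors)
    finally:
        # Don't block on abandoned (timed-out) calls; their threads finish in the background.
        pool.shutdown(wait=False)


# Stand-in providers for the demo; real ones would call an LLM vendor's SDK.
def flaky_provider(prompt: str) -> str:
    raise ConnectionError("simulated outage")


def slow_provider(prompt: str) -> str:
    time.sleep(0.5)  # simulated latency spike
    return "slow answer"


def healthy_provider(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    name, text = complete_with_fallback(
        "Hello",
        [("primary", flaky_provider), ("secondary", slow_provider), ("tertiary", healthy_provider)],
        timeout_s=0.1,
    )
    print(name, text)  # tertiary echo: Hello
```

A timed-out call is abandoned rather than cancelled, because a running Python thread cannot be forcibly stopped. A production router would more likely also track recent error rates and latencies, so that an unhealthy provider is skipped up front instead of being retried on every request.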

Currently in development, LangTale is set to launch a private beta before its public release. Geared towards simplifying LLM prompt management and enriching the experience for both developers and non-technical users, LangTale is poised to revolutionize the way teams interact with and optimize their AI models.

More details about Langtail

What features does LangTale offer to simplify LLM prompt management?

LangTale provides quick integration for non-technical team members, expense tracking, and monitoring of LLM performance, latency, and more. Every change to a prompt is versioned, so you can easily roll back to an earlier version. You can also keep thorough logs of your API interactions, monitor LLM outputs, and set usage and spending limits to manage your resources. On top of that, LangTale offers dynamic LLM provider switching, per-prompt environment settings, and debugging and testing tools.

What kind of analytics and reporting capabilities does LangTale provide?

LangTale offers analytics and reporting tools for tracking Large Language Model performance. By surfacing metrics such as expenses and latency, it helps customers make data-driven decisions.

How is LangTale tailored for developers?

LangTale is designed with developers in mind, offering rate limiting, efficient LLM provider switching, and continuous integration for LLMs. It simplifies LLM prompt management by integrating easily with existing systems, providing a separate environment for each prompt, supporting fast testing and debugging, and switching between LLM providers dynamically.

How does LangTale streamline the management of LLM prompts?

LangTale simplifies the administration of LLM prompts by offering a centralized workspace where people can collaborate, manage versions, edit prompts, run tests, keep logs, and create environments. It also makes integration quick for non-technical team members and adds analytics and reporting, thorough change management, smart resource management, fast debugging and testing tools, and per-prompt environment settings for rapid testing and deployment.