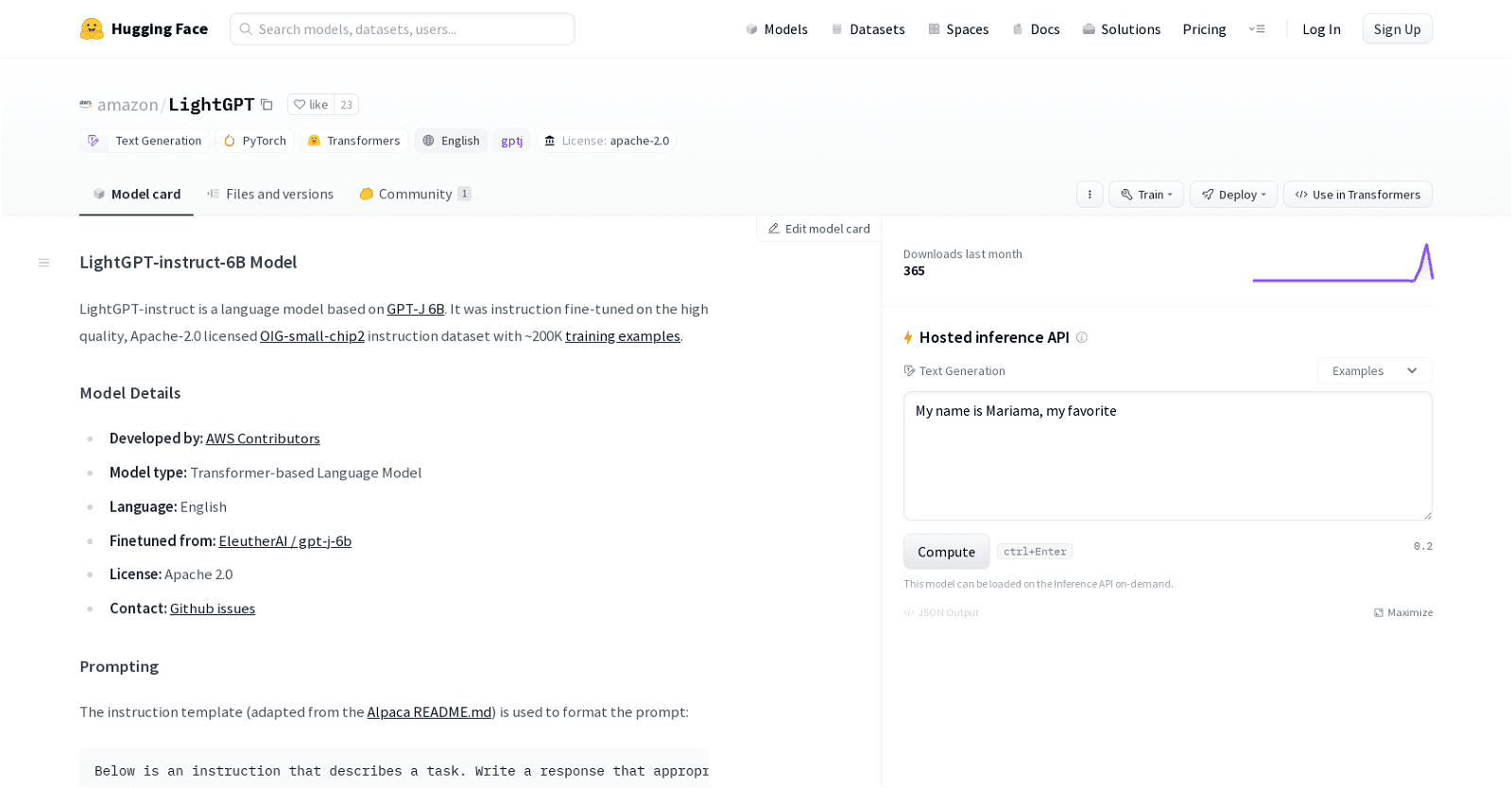

LightGPT-instruct-6B, developed by AWS Contributors and based on GPT-J 6B, is a language model fine-tuned on the OIG-small-chip2 instruction dataset, which comprises approximately 200K training examples. This Transformer-based model generates text in response to prompts that follow a standardized format, in which the prompt ends with ### Response:\n to signal where the completion begins.

The LightGPT-instruct-6B model is designed primarily for English conversations and is released under the Apache 2.0 license. It can be deployed on Amazon SageMaker, and the documentation provides a step-by-step guide for the process. The model is evaluated on LAMBADA PPL, LAMBADA ACC, WINOGRANDE, HELLASWAG, and PIQA, with GPT-J as the point of comparison.

However, users are cautioned about the model’s limitations. It can fail to follow lengthy instructions, give incorrect answers to mathematical and reasoning questions, and occasionally generate false or misleading output.

The model generates responses based solely on the provided prompt and has no contextual understanding beyond it. Thus, while it can respond to a wide range of conversational prompts, including those that contain specific instructions, users should keep these constraints in mind when using it.

More details about LightGPT

How many training examples were used to fine-tune LightGPT-instruct-6B?

Around 200K training examples were used to fine-tune LightGPT-instruct-6B.

How can LightGPT-instruct-6B be deployed on Amazon SageMaker?

To deploy LightGPT-instruct-6B on Amazon SageMaker, define the model with the SageMaker DJLModel class, specifying configuration such as dtype, task, and the number of GPUs across which the model is partitioned. An AWS IAM role is also required for authorization. The model is then deployed by calling the deploy method.
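The deployment steps described above might look roughly like the following sketch. Several details here are assumptions rather than facts from this page: the model id `amazon/LightGPT`, the `fp16` dtype, the `ml.g5.12xlarge` instance type, and the helper names `djl_model_kwargs` and `deploy_lightgpt` are all illustrative. The keyword names passed to `DJLModel` follow older releases of the SageMaker Python SDK and may differ in the version you have installed, so check them against the SDK documentation before use.

```python
from typing import Any, Dict


def djl_model_kwargs(role_arn: str, num_gpus: int = 2) -> Dict[str, Any]:
    """Collect the settings mentioned in the text: dtype, task, GPU count, IAM role."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return {
        "model_id": "amazon/LightGPT",     # assumed Hugging Face model id
        "role": role_arn,                  # IAM role used for authorization
        "dtype": "fp16",                   # assumed precision
        "task": "text-generation",
        "number_of_partitions": num_gpus,  # GPUs the model is split across (name may vary by SDK version)
    }


def deploy_lightgpt(role_arn: str,
                    instance_type: str = "ml.g5.12xlarge",  # assumed instance type
                    num_gpus: int = 2):
    """Define the model with DJLModel, then call deploy. Requires AWS credentials."""
    # Imported lazily so the helper above can be used without the SageMaker SDK installed.
    from sagemaker.djl_inference import DJLModel

    model = DJLModel(**djl_model_kwargs(role_arn, num_gpus))
    return model.deploy(instance_type, initial_instance_count=1)
```

Calling `deploy_lightgpt` creates a billable endpoint, so the returned predictor should be deleted when it is no longer needed.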

Why might LightGPT-instruct-6B generate incorrect answers to math and reasoning questions?

The website doesn’t explicitly state the reason, but language models in general, including this one, are known to struggle with mathematical concepts and certain types of reasoning. The limitation likely arises because the model lacks the deep logical reasoning needed to solve mathematical problems and more complex reasoning tasks accurately.

What is the instruction template used by LightGPT-instruct-6B?

The instruction template used by LightGPT-instruct-6B consists of an instruction block and a response block, which begin with ### Instruction: and ### Response: respectively. The model starts generating its response when the input prompt ends with ### Response:\n.
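As a minimal sketch of this template, the helper below wraps an instruction in the two blocks and ends the prompt with the response header followed by a newline. The function names and the blank line between the blocks are assumptions for illustration; the page only specifies the two headers and the newline that ends the prompt.

```python
INSTRUCTION_HEADER = "### Instruction:"
RESPONSE_HEADER = "### Response:"


def build_prompt(instruction: str) -> str:
    """Wrap an instruction in the template; the prompt ends with '### Response:' plus a newline."""
    return f"{INSTRUCTION_HEADER}\n{instruction.strip()}\n\n{RESPONSE_HEADER}\n"


def extract_response(generated: str) -> str:
    """Return the text after the last response header (the whole text if no header is present)."""
    _, _, tail = generated.rpartition(RESPONSE_HEADER)
    return tail.strip()


prompt = build_prompt("Name three primary colors.")
print(prompt)
```

`extract_response` is useful when the serving stack returns the prompt together with the completion, since it keeps only what follows the response header.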