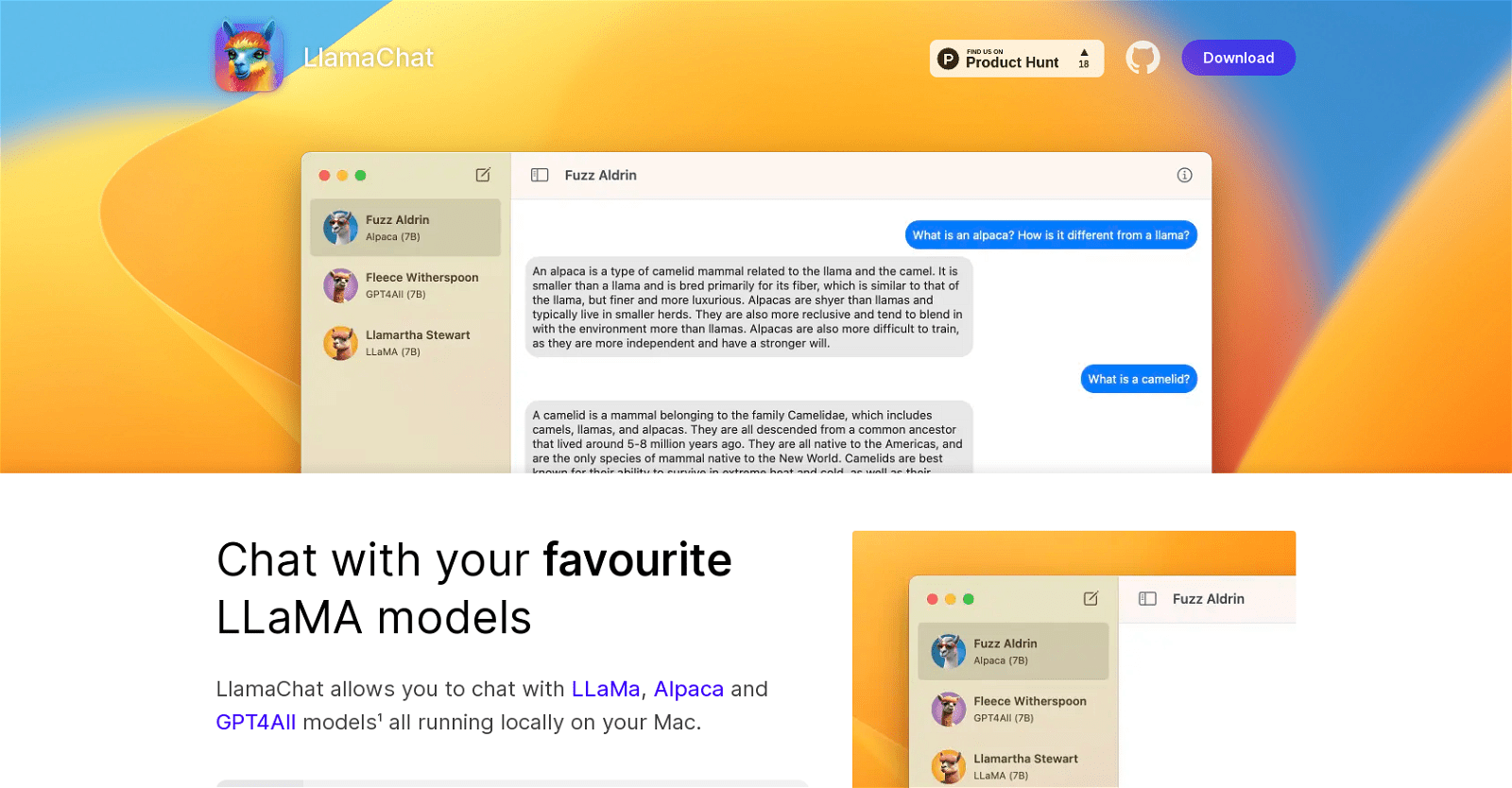

LlamaChat is an AI chat tool for Mac that lets users interact with several models, including LLaMA, Alpaca, and GPT4All. Among these, Alpaca, developed at Stanford, stands out: it is a LLaMA model fine-tuned on 52,000 instruction-following demonstrations generated with OpenAI’s text-davinci-003.

The tool can import either raw published PyTorch model checkpoints or pre-converted .ggml model files. It is built on the open-source libraries llama.cpp and llama.swift, and LlamaChat itself is fully open-source and free.
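The two import paths mentioned above can be told apart, very roughly, by file extension. The following is a minimal sketch of that idea, not LlamaChat's actual import logic: the function name, the format labels, and the extension mapping are all assumptions made for illustration, and an extension is only a weak hint about a file's real contents.

```python
from pathlib import Path

# Hypothetical mapping for illustration only; not taken from LlamaChat's source.
FORMAT_BY_SUFFIX = {
    ".pth": "pytorch-checkpoint",  # raw published PyTorch checkpoint
    ".pt": "pytorch-checkpoint",
    ".ggml": "ggml",               # pre-converted model file
}


def guess_model_format(path: str) -> str:
    """Guess a model file's format from its extension alone."""
    suffix = Path(path).suffix.lower()
    return FORMAT_BY_SUFFIX.get(suffix, "unknown")


if __name__ == "__main__":
    for name in ("consolidated.00.pth", "alpaca-7b.ggml", "README.md"):
        print(name, "->", guess_model_format(name))
```

A file reported as "unknown" here may still be a valid model stored under a different extension, so a real importer would need to inspect the file contents rather than trust the name.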

LlamaChat does not ship with any model files. Users must obtain the models themselves, add them to the app, and comply with each provider’s terms and conditions.

LlamaChat is not affiliated with Meta Platforms, Inc., Leland Stanford Junior University, or Nomic AI, Inc. It offers a chatbot-like experience for users to interact with a variety of models.

The tool simplifies model conversion and interaction, granting access to popular models like Alpaca, LLaMA, GPT4All, and the upcoming Vicuna model. LlamaChat runs on both Intel and Apple Silicon Macs and requires macOS 13 or later.

In short, LlamaChat is an open-source AI chat tool that gives enthusiasts and researchers a convenient way to work with a range of models.

FAQs for LlamaChat:

Is LlamaChat compatible with Apple Silicon?

Yes. LlamaChat runs on Apple Silicon Macs as well as Intel Macs.

What are the main libraries behind LlamaChat?

LlamaChat relies on two primary open-source libraries: llama.cpp and llama.swift.

Can I use LlamaChat on my Mac?

Yes. LlamaChat is designed specifically for Mac and requires macOS 13 or later.

Is LlamaChat an open-source platform?

Yes. LlamaChat is entirely open-source and is built on the open-source libraries llama.cpp and llama.swift.