Meta AI-Llama 3 is Meta's openly available large language model for building advanced AI applications. It comes in 8B and 70B parameter sizes, each released in pretrained and instruction-tuned versions, and supports a wide range of applications. Extensive pretraining gives the models a deep contextual understanding, which makes them well suited to tasks that require precision and detailed comprehension.

The instruction-tuned variants are further trained to follow instructions and carry on dialogue, which makes them easier to use directly and improves the experience for end users. By combining large-scale pretraining with instruction tuning, Meta Llama 3 adapts to use cases across many industries and lets users build AI applications that handle complex tasks efficiently and accurately.

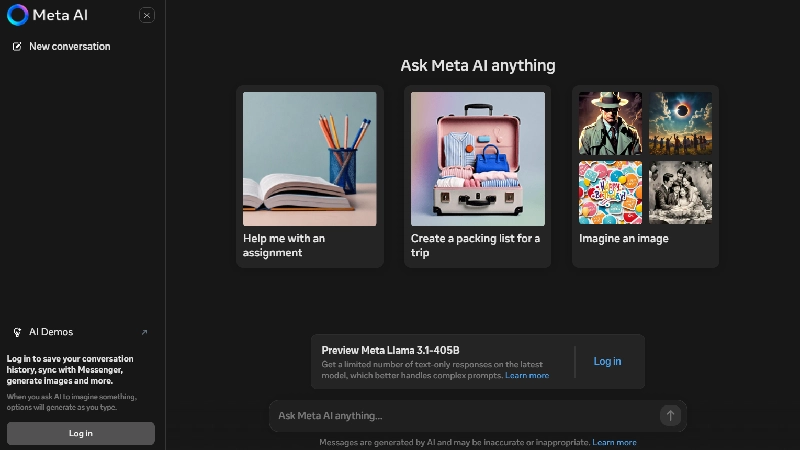

Recent News About Meta AI

Meta has launched its AI chatbot, Meta AI, in six new countries: Brazil, the U.K., the Philippines, Bolivia, Guatemala, and Paraguay. The expansion is part of a gradual rollout that will extend to more regions, including the Middle East, and will eventually make Meta AI available in 43 countries and more than a dozen languages. The chatbot is accessible on Facebook, Instagram, WhatsApp, Messenger, and the Meta.ai website, and it now supports languages such as Arabic, Indonesian, Thai, and Vietnamese.

Meta AI has nearly 500 million users globally, with India being its largest market due to WhatsApp’s popularity. Recent updates include new celebrity voices, lip-synced translations, and a generative AI-powered “Imagine” feature for creating photos through natural language prompts. Meta AI can also understand and edit your photos, enhancing user interaction and engagement across Meta’s platforms.

How to Use Meta AI-Llama 3

Meta AI-Llama 3 is a large language model that developers and researchers can download, run, and fine-tune for their own applications, from chat assistants to content tools. A typical workflow looks like this:

- Access the Model: Find Llama 3 models on platforms like Hugging Face, Azure ML, or Amazon SageMaker. Downloading the official weights from Hugging Face requires accepting Meta's license first.

- Set Up Your Environment: Install necessary libraries and dependencies, such as PyTorch and Hugging Face Transformers.

- Load the Model: Load the Llama 3 weights into your application through your platform's APIs, for example `AutoModelForCausalLM.from_pretrained` in Hugging Face Transformers.

- Fine-Tune the Model: Customize Llama 3 for your specific use case using tools like torchtune for efficient training.

- Integrate with Applications: Embed the model into chatbots, content generation tools, or other applications as needed.

- Test and Evaluate: Check the model's outputs on representative prompts from your use case, then adjust your prompts or fine-tuning based on the results and user feedback.

- Deploy Your Application: Launch your application and monitor its performance and user interactions for continuous improvement.
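To make the integration step more concrete, here is a minimal Python sketch of how a conversation is rendered into the prompt format used by the Llama 3 instruction-tuned models. The special tokens (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) follow Meta's published Llama 3 chat format; the helper `format_llama3_chat` and its `add_generation_prompt` parameter are hypothetical names chosen for illustration, not part of any library. In practice, Hugging Face Transformers builds the same string for you through `tokenizer.apply_chat_template`, so treat this as a look under the hood rather than code you need to ship.

```python
from typing import Dict, List

# Special tokens from the Llama 3 instruction-tuned chat format.
BEGIN_OF_TEXT = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
END_OF_TURN = "<|eot_id|>"

# This sketch only accepts the three roles used by the Llama 3 chat format.
ALLOWED_ROLES = {"system", "user", "assistant"}


def format_llama3_chat(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render {"role": ..., "content": ...} messages as a Llama 3 chat prompt string.

    Hypothetical helper for illustration; libraries normally do this for you.
    """
    parts = [BEGIN_OF_TEXT]
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        # Each turn is: role header, a blank line, the trimmed content, end-of-turn token.
        parts.append(f"{START_HEADER}{role}{END_HEADER}\n\n{message['content'].strip()}{END_OF_TURN}")
    if add_generation_prompt:
        # Open an empty assistant turn so the model writes the reply next.
        parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


prompt = format_llama3_chat([
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Suggest a name for a recipe chatbot."},
])
print(prompt)
```

When generating from a prompt like this, stop on the `<|eot_id|>` token, which marks the end of the assistant's turn.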

Features of Meta AI-Llama 3

Meta AI-Llama 3 is a large language model that Meta describes as state-of-the-art for its size, with improved reasoning and a wide range of capabilities for developers.

- Optimized Architecture: Decoder-only transformer design with a 128K token vocabulary for efficient language encoding.

- Extensive Training Data: Pretrained on over 15 trillion tokens from publicly available sources, a dataset seven times larger than Llama 2's and containing four times more code.

- Multilingual Support: Over 5% of the pretraining data is high-quality non-English text covering more than 30 languages, although performance in these languages is not expected to match English.

- Improved Post-Training: Combines supervised fine-tuning, rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO) to improve response quality, reasoning, and coding.

- Competitive Performance: Meta reports that both sizes outperform comparably sized models, as well as Llama 2, on benchmarks such as MMLU and HumanEval.

- Scalable Pretraining: Uses detailed scaling laws to choose the training data mix and allocate compute, aiming for strong performance across use cases.

- Accessible Platforms: Available on major platforms, with a tokenizer that produces up to 15% fewer tokens than Llama 2's and safety tools such as Llama Guard 2 and Code Shield for responsible deployment.
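The "decoder-only" design in the architecture bullet means each token can attend only to the tokens before it, which is what lets the model generate text one token at a time. The sketch below illustrates just that causal masking idea with a single toy attention head in plain Python. It is a deliberate simplification, not Llama 3's implementation, which adds learned projections, many attention heads, positional encodings, and dozens of stacked layers; the function `causal_self_attention` is a hypothetical name used only here.

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_self_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Toy single-head scaled dot-product attention with a causal mask.

    Position i attends only to positions 0..i, never to later tokens.
    """
    d = len(queries[0])
    outputs: Matrix = []
    for i, q in enumerate(queries):
        # Causal mask: score only positions j <= i; later positions are skipped entirely.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in range(i + 1)]
        # Numerically stable softmax over the visible positions.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output is the weighted average of the visible value vectors.
        outputs.append([sum(w * values[j][c] for j, w in enumerate(weights)) for c in range(len(values[0]))])
    return outputs


# Toy inputs: three "tokens", reusing the same vectors as queries and keys.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
vals = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
for i, row in enumerate(causal_self_attention(x, x, vals)):
    print(i, [round(n, 3) for n in row])
```

Because of the mask, changing a later token never changes the outputs for earlier positions, and the first position simply returns its own value vector.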

FAQs About Meta AI-Llama 3

How can Meta Llama 3 help in building future-oriented AI models?

Meta Llama 3 combines large-scale pretraining with instruction tuning, so developers can start from a strong general-purpose model and adapt it, through prompting or fine-tuning, to applications across many industries, including ones that involve complex tasks.

What is the difference between the 8B and 70B versions of Meta Llama 3?

The 8B and 70B labels refer to parameter counts: roughly 8 billion and 70 billion parameters respectively. The 70B model scores higher on Meta's reported benchmarks and is better suited to complex tasks, while the 8B model needs far less memory and compute, so it is cheaper and faster to run and easier to deploy on a single GPU.
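A rough way to see the practical gap between the two sizes is to estimate how much memory the weights alone occupy. The sketch below multiplies the parameter count by the bytes each parameter takes at a given precision. It assumes exactly 8 and 70 billion parameters (the real counts differ slightly) and ignores the extra memory needed for activations, the KV cache, and framework overhead, so actual requirements are higher; `weight_memory_gb` is a hypothetical helper name.

```python
# Nominal parameter counts implied by the model names (real counts differ slightly).
PARAM_COUNTS = {"Llama 3 8B": 8e9, "Llama 3 70B": 70e9}

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, count in PARAM_COUNTS.items():
    sizes = ", ".join(f"{p}: {weight_memory_gb(count, p):.0f} GB" for p in BYTES_PER_PARAM)
    print(f"{name} -> {sizes}")

# Prints:
# Llama 3 8B -> bf16: 16 GB, int8: 8 GB, int4: 4 GB
# Llama 3 70B -> bf16: 140 GB, int8: 70 GB, int4: 35 GB
```

At 16-bit precision the 8B weights (about 16 GB) fit on a single high-memory GPU, while the 70B weights (about 140 GB) typically need several GPUs or aggressive quantization.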

How does Meta Llama 3 improve AI efficiency?

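Meta Llama 3 is designed to be efficient both to run and to use. Its tokenizer encodes text in fewer tokens than Llama 2's, and grouped query attention (GQA) in both model sizes speeds up inference. Instruction tuning lets the models follow natural-language instructions across many tasks, so teams can often reuse one model instead of training a separate one for each task or industry.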

What applications can Meta Llama 3 support?

Meta Llama 3 can power a wide range of applications, including chatbots and assistants, content generation, complex problem-solving, AI development, and AI education. Because it can be fine-tuned, it can be adapted to tasks across many industries, which makes it highly versatile.