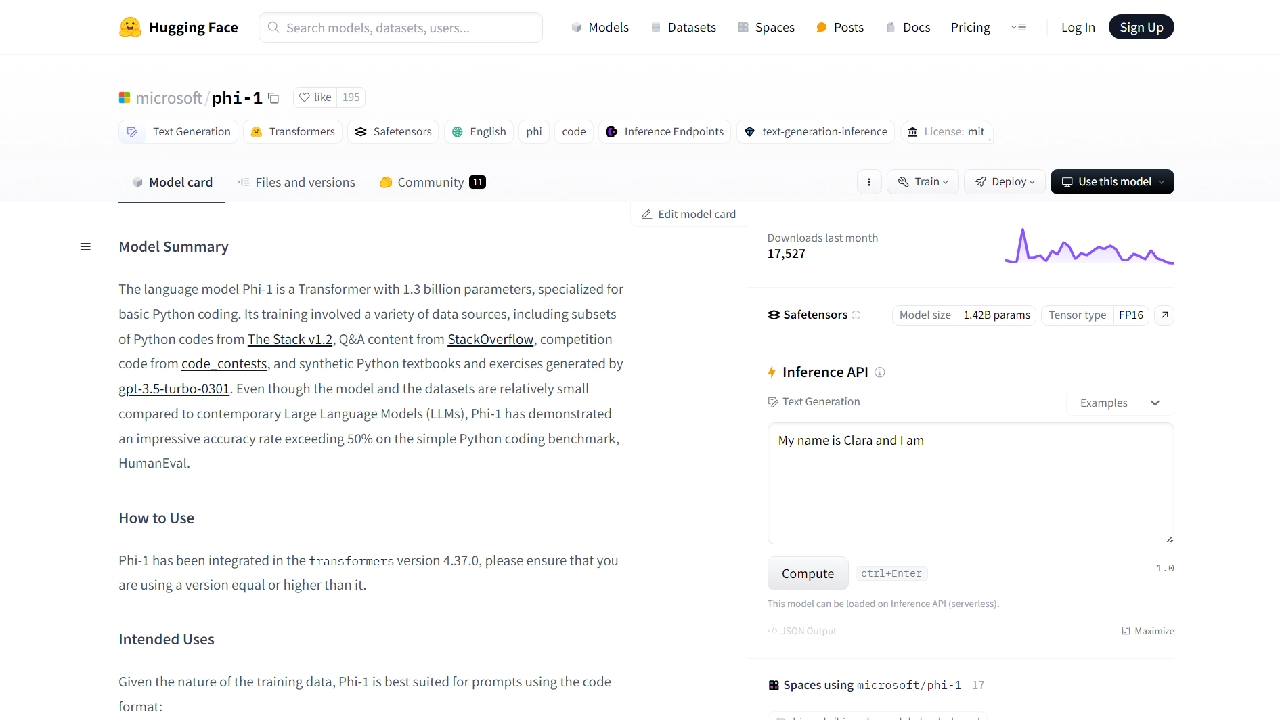

Phi-1 is a specialized Transformer language model for basic Python programming tasks, with 1.3 billion parameters. It was trained on a mix of data sources, including Python code from The Stack v1.2, StackOverflow Q&A, programming-competition code, and synthetic Python textbooks, and it reaches over 50% pass@1 accuracy on the HumanEval code-generation benchmark.
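HumanEval results are usually reported as pass@k: the probability that at least one of k sampled completions for a problem passes that problem's unit tests. The sketch below implements the unbiased pass@k estimator that was introduced alongside HumanEval, computed as a product for numerical stability. The function names `pass_at_k` and `benchmark_pass_at_k` are illustrative and are not part of any official evaluation harness.

```python
def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of completions sampled, c: how many passed the unit tests,
    k: number of attempts the metric allows.
    """
    if n - c < k:
        # Every subset of k samples contains at least one passing sample.
        return 1.0
    # 1 - C(n - c, k) / C(n, k), rewritten as a running product.
    prob_all_fail = 1.0
    for i in range(n - c + 1, n + 1):
        prob_all_fail *= 1.0 - k / i
    return 1.0 - prob_all_fail


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# One greedy sample per problem: pass@1 is simply the fraction solved.
print(benchmark_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 1)], k=1))
```

With k = 1 the estimator reduces to c / n, so a pass@1 score above 50% means that, on average, more than half of the sampled completions pass the benchmark's tests.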

Phi-1 works best with prompts written in Python code format, such as a function signature followed by a comment or docstring describing the task; the model then generates the code that follows. Its outputs should be treated as starting points rather than definitive solutions. Use caution when integrating Phi-1 into applications: it has not been tested on production-level code, and because of how it was trained, it may reproduce code found online.
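A minimal sketch of preparing a code-format prompt and post-processing the result is shown below. Both helpers, `build_code_prompt` and `truncate_completion`, are hypothetical names chosen for illustration, and the truncation rule (stop at the first unindented line) is an assumed heuristic for cutting off text the model produces after the requested function body, not behavior documented for Phi-1.

```python
def build_code_prompt(signature: str, description: str) -> str:
    """Return a code-format prompt: a function signature plus a docstring.

    The model is expected to continue this text with the function body.
    """
    return f'{signature}\n    """\n    {description}\n    """\n'


def truncate_completion(completion: str) -> str:
    """Keep only the indented body of the generated function.

    Heuristic (assumption): stop at the first non-empty line that starts at
    column 0, e.g. when the model begins an unrelated top-level definition.
    """
    kept = []
    for line in completion.splitlines():
        if line and not line[0].isspace():
            break
        kept.append(line)
    return "\n".join(kept)


prompt = build_code_prompt("def print_prime(n):", "Print all primes between 1 and n")
print(prompt)
```

The prompt string could then be tokenized and passed to the model's generation routine (for example, through the Hugging Face `transformers` library with the `microsoft/phi-1` checkpoint). Concatenating `prompt` with the truncated completion yields a complete function that should still be reviewed and tested before use.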

Phi-1 also has limitations; for example, it was trained on a limited range of Python packages, so generated code that relies on other packages should have its API calls verified manually. These constraints underscore the importance of using Phi-1 judiciously and validating its outputs in practical applications.
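One simple way to act on the package limitation is to flag generated code that imports modules outside the small set the model saw most often. The sketch below uses Python's standard `ast` module to collect top-level imported module names. The `COMMON_PACKAGES` set mirrors the packages the Phi-1 model card lists for its fine-tuning data; treat that list as an assumption and adjust it for your own review policy. The helper name `unfamiliar_imports` is hypothetical.

```python
import ast

# Assumption: packages the Phi-1 model card reports as covering nearly all of
# its fine-tuning scripts. Imports outside this set deserve a closer look.
COMMON_PACKAGES = {"typing", "math", "random", "collections", "datetime", "itertools"}


def unfamiliar_imports(code: str) -> set[str]:
    """Return top-level modules imported by `code` that are not in COMMON_PACKAGES.

    Raises SyntaxError if the generated code does not parse.
    """
    tree = ast.parse(code)
    modules = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Skip relative imports (level > 0); they refer to local modules.
            modules.add(node.module.split(".")[0])
    return modules - COMMON_PACKAGES


generated = "import math\nimport numpy as np\nfrom os.path import join\n"
print(sorted(unfamiliar_imports(generated)))
```

A non-empty result does not mean the code is wrong, only that its API usage falls outside what the model was mostly trained on and should be checked by hand.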