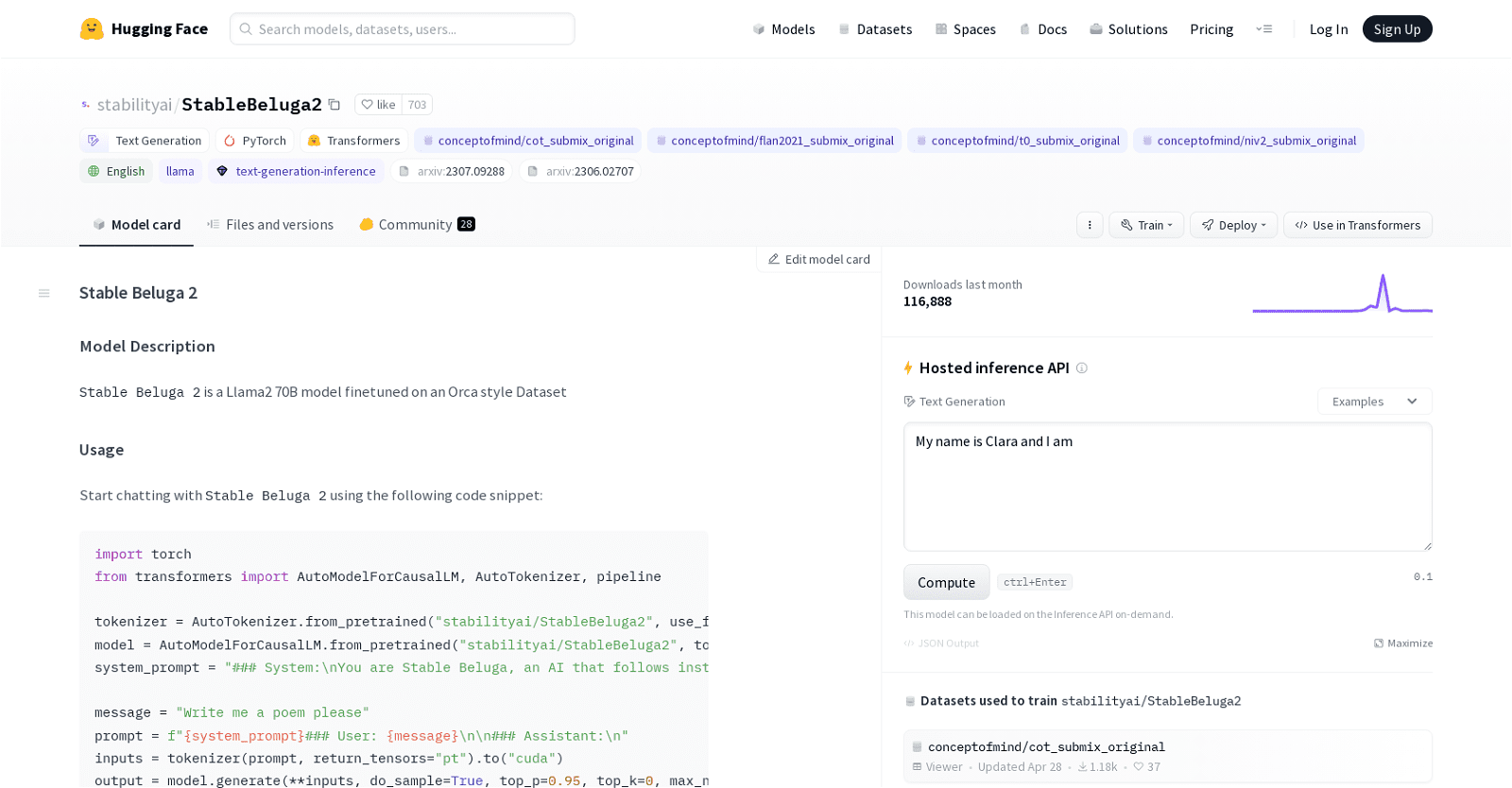

StableBeluga2 is an auto-regressive language model developed by Stability AI. It is a fine-tuned version of Llama2 70B that generates text in response to user prompts, and it can be used for natural language processing tasks such as text generation and conversational AI. Developers can run it by importing the relevant classes from the Hugging Face Transformers library and following the example code published with the model.

The model takes a prompt as input and generates a continuation that serves as its response. The prompt is structured into a system prompt, a user message, and the assistant's output, each introduced by its own header, which keeps the interaction organized. Generation can also be tuned with sampling parameters such as top-p and top-k, which trade off the diversity of the output against its focus by limiting which tokens can be sampled.
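The following is a minimal sketch of that flow in Python. It assumes the Hugging Face Hub ID `stabilityai/StableBeluga2` and a `### System:` / `### User:` / `### Assistant:` header layout; check the exact template and whitespace against the official model card. The placeholder system prompt, the sampling values (`top_p=0.95`, `top_k=0`, where 0 disables the top-k limit in Transformers), and the helper names `build_prompt`, `load_model`, and `generate_reply` are illustrative choices, not part of any official API. The heavy imports sit inside `load_model` so the prompt builder works without PyTorch installed; actually running the 70B model needs substantial GPU memory.

```python
# Sketch: prompting StableBeluga2 via Hugging Face Transformers.
# Assumptions: the Hub ID, the "### <Role>:" header layout, the placeholder
# system prompt and the sampling defaults below are illustrative, not official.
from functools import lru_cache

MODEL_ID = "stabilityai/StableBeluga2"
SYSTEM_PROMPT = "You are a helpful assistant."  # hypothetical placeholder


def build_prompt(user_message: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Lay out the System, User and Assistant sections of the prompt.

    The prompt ends with an open Assistant header so that the model's
    continuation is the assistant's reply.
    """
    return (
        f"### System:\n{system_prompt}\n\n"
        f"### User:\n{user_message}\n\n"
        "### Assistant:\n"
    )


@lru_cache(maxsize=1)
def load_model(model_id: str = MODEL_ID):
    """Load the tokenizer and model once; imports are deferred so that
    build_prompt() can be used without torch/transformers installed."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # half precision to reduce memory use
        low_cpu_mem_usage=True,
        device_map="auto",  # spreads layers over available devices (needs `accelerate`)
    )
    return tokenizer, model


def generate_reply(user_message: str, top_p: float = 0.95, top_k: int = 0,
                   max_new_tokens: int = 256) -> str:
    tokenizer, model = load_model()
    inputs = tokenizer(build_prompt(user_message), return_tensors="pt").to(model.device)
    output = model.generate(
        **inputs,
        do_sample=True,  # sample instead of greedy decoding
        top_p=top_p,     # nucleus threshold
        top_k=top_k,     # 0 disables the top-k limit
        max_new_tokens=max_new_tokens,
    )
    # The output begins with the prompt tokens; decode only the new ones.
    prompt_len = inputs["input_ids"].shape[1]
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)
```

For example, `generate_reply("Write me a poem please")` returns the sampled reply text. Raising `top_p` or `top_k` widens the pool of candidate tokens, which usually makes the output more varied.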

StableBeluga2 was fine-tuned on an internal Orca-style dataset using mixed-precision (BF16) training and the AdamW optimizer. Its published specifications list the model type (auto-regressive language model), the language (English), and the library it is implemented in (Hugging Face Transformers).
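BF16 (bfloat16) keeps float32's sign bit and 8-bit exponent but only 7 explicit mantissa bits, so it covers roughly the same numeric range as float32 at much lower precision. The stdlib-only sketch below rounds a Python float to the nearest bfloat16 value to show that trade-off. The function name `to_bfloat16` is hypothetical, and the sketch illustrates only the number format, not Stability AI's training setup (mixed-precision recipes commonly keep some values, such as master weights, in float32).

```python
import math
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (round half to even).

    Works on the float32 bit pattern: bfloat16 is its upper 16 bits.
    Assumes x fits in float32 (struct raises OverflowError otherwise);
    NaN is returned unchanged.
    """
    if math.isnan(x):
        return x
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    # Add just under half of the discarded range, plus the lowest kept bit,
    # so exact ties round to an even mantissa; then drop the low 16 bits.
    lsb = (bits >> 16) & 1
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


for value in (1.0, 3.14159, 1e30):
    print(value, "->", to_bfloat16(value))
```

The output shows the precision loss (3.14159 becomes 3.140625) while a large value such as 1e30 stays finite, which is why BF16 is less prone to overflow than float16 during training.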

Like other language models, StableBeluga2 may generate inaccurate, biased, or objectionable content. Developers should therefore carry out safety testing and tuning tailored to their specific application before deploying it. Questions about the model can be sent to Stability AI by email, and the model's documentation includes citation information for referencing it in academic or professional work.

More details about StableBeluga2

What is the training process for StableBeluga2?

StableBeluga2 is trained via supervised fine-tuning on an internal Orca-style dataset. Its training procedure involves mixed-precision (BF16) training and optimization via AdamW.
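AdamW is Adam with decoupled weight decay: the weights are shrunk directly by `lr * weight_decay` instead of adding an L2 penalty to the gradient. The sketch below implements the update step over a plain list of floats, following the ordering documented for PyTorch's `torch.optim.AdamW` (decay first, then the bias-corrected Adam step). It is a textbook illustration, not Stability AI's training code; the class name and hyperparameter values are assumptions.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class AdamW:
    """Minimal AdamW over a flat list of float parameters (illustrative only)."""
    lr: float = 1e-3
    betas: tuple[float, float] = (0.9, 0.999)
    eps: float = 1e-8
    weight_decay: float = 0.01
    t: int = 0
    m: list[float] = field(default_factory=list)  # first-moment estimates
    v: list[float] = field(default_factory=list)  # second-moment estimates

    def step(self, params: list[float], grads: list[float]) -> list[float]:
        beta1, beta2 = self.betas
        if not self.m:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            # Decoupled weight decay: shrink the weight itself, not the gradient.
            p -= self.lr * self.weight_decay * p
            # Exponential moving averages of the gradient and its square.
            self.m[i] = beta1 * self.m[i] + (1 - beta1) * g
            self.v[i] = beta2 * self.v[i] + (1 - beta2) * g * g
            # Bias correction for the zero-initialised moments.
            m_hat = self.m[i] / (1 - beta1 ** self.t)
            v_hat = self.v[i] / (1 - beta2 ** self.t)
            p -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)
            updated.append(p)
        return updated
```

For example, minimising (x - 3)^2 by repeatedly calling `step([x], [2 * (x - 3)])` with `lr=0.1` and `weight_decay=0.0` moves x from 0 to about 3 within a few hundred steps; a nonzero `weight_decay` additionally pulls the weight toward zero.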

What is the StableBeluga2 AI tool?

StableBeluga2 is an auto-regressive language model developed by Stability AI. It is designed to generate text based on user prompts and can be used for various natural language processing tasks like text generation and conversational AI.

What is the purpose of the top-p and top-k parameters in StableBeluga2?

The top-p and top-k parameters control which tokens StableBeluga2 can choose from at each step of text generation. The top-p parameter controls nucleus sampling: the next token is sampled only from the smallest set of most probable tokens whose cumulative probability reaches the threshold p. The top-k parameter limits sampling to the k most probable tokens at each step.
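To make the two filters concrete, here is a pure-Python sketch over a toy next-token distribution. It applies top-k first and then top-p to the renormalised survivors, the same order used by the sampling warpers in Hugging Face Transformers. The function names and the toy probabilities are hypothetical, and real implementations work on logit tensors rather than dictionaries.

```python
from __future__ import annotations

import random


def top_k_top_p_filter(probs: dict[str, float], top_k: int = 0,
                       top_p: float = 1.0) -> dict[str, float]:
    """Keep the tokens that survive top-k and then top-p filtering, renormalised.

    top_k=0 means no top-k limit; top_p=1.0 means no nucleus limit.
    """
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k > 0:
        # Top-k: keep only the k most probable tokens, then renormalise.
        ranked = ranked[:top_k]
        total = sum(p for _, p in ranked)
        ranked = [(tok, p / total) for tok, p in ranked]
    # Top-p (nucleus): smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def sample_token(probs: dict[str, float], top_k: int = 0, top_p: float = 1.0,
                 rng: random.Random | None = None) -> str:
    """Draw one token from the filtered distribution."""
    rng = rng or random.Random()
    filtered = top_k_top_p_filter(probs, top_k, top_p)
    tokens, weights = zip(*filtered.items())
    return rng.choices(tokens, weights=weights, k=1)[0]
```

With `probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}`, `top_p=0.75` keeps only "a" and "b", because their combined probability of 0.8 is the smallest total that reaches 0.75, while `top_k=3` drops only "d".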

How do the ‘User’ and ‘Assistant’ components work in StableBeluga2?

In StableBeluga2, the ‘User’ and ‘Assistant’ components are parts of the prompt format. The ‘User’ component represents the prompt or message from the user, while the ‘Assistant’ component represents the output or response from StableBeluga2.
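Because a decoder-only model's output sequence usually begins with the prompt itself, it can help to cut the decoded text at the last Assistant header to recover just the Assistant part. The hypothetical helper `extract_reply` below does that, assuming the `### Assistant:` and `### User:` header strings of the StableBeluga2 prompt template; it also stops at a `### User:` header in case the model starts writing the next user turn on its own.

```python
ASSISTANT_HEADER = "### Assistant:"  # assumed header string from the prompt template
USER_HEADER = "### User:"            # assumed header string from the prompt template


def extract_reply(decoded: str) -> str:
    """Return the Assistant section of a decoded prompt-plus-completion string."""
    # Keep only the text after the last Assistant header.
    _, sep, reply = decoded.rpartition(ASSISTANT_HEADER)
    if not sep:
        # No header found: treat the whole string as the reply.
        return decoded.strip()
    # Stop if the model begins a new User turn by itself.
    reply = reply.split(USER_HEADER, 1)[0]
    return reply.strip()
```

For instance, applied to `"### System:\nS\n\n### User:\nHi\n\n### Assistant:\nHello there!"`, the helper returns `"Hello there!"`.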