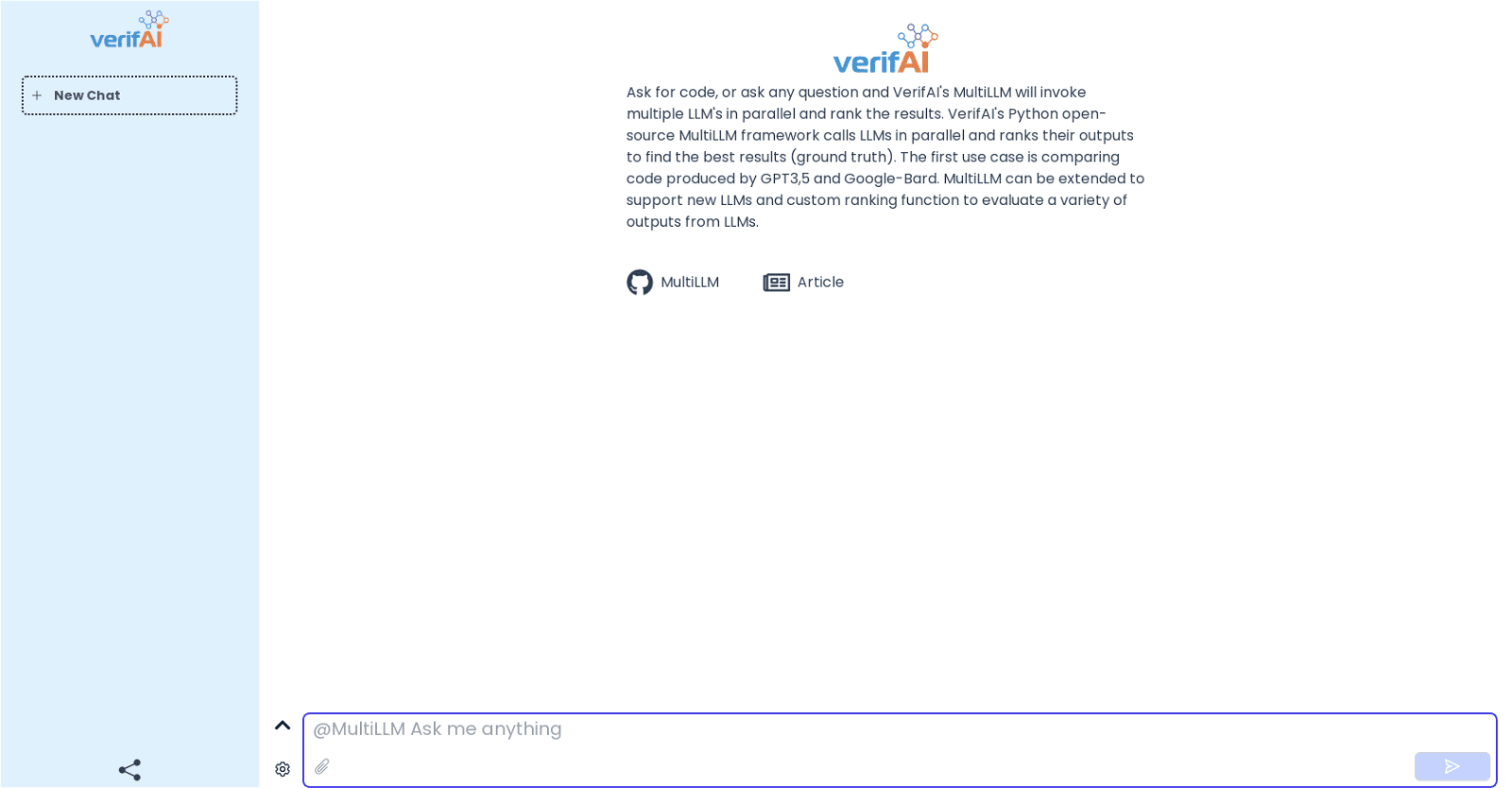

VerifAI’s MultiLLM is an open-source Python framework for querying multiple Large Language Models (LLMs) at once. MultiLLM sends the same prompt to several LLMs concurrently and then ranks their outputs to find the result most likely to be correct, that is, the one closest to the ground truth.
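The fan-out-and-rank idea can be sketched in a few lines of Python. This is a minimal illustration, not MultiLLM’s actual API. `query_all`, `rank_responses`, and the model stubs are hypothetical names, and each "model" is a plain function standing in for a real LLM client call. The scoring function in the usage part is a placeholder.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# A "model" here is any function mapping a prompt to a text reply (hypothetical stand-in).
Model = Callable[[str], str]


def query_all(models: Dict[str, Model], prompt: str) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect the replies."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(model, prompt) for name, model in models.items()}
        return {name: future.result() for name, future in futures.items()}


def rank_responses(
    responses: Dict[str, str], score: Callable[[str], float]
) -> List[Tuple[str, str, float]]:
    """Return (model name, response, score) tuples, highest score first."""
    scored = [(name, text, score(text)) for name, text in responses.items()]
    return sorted(scored, key=lambda item: item[2], reverse=True)


# Usage with stub models and a placeholder scoring function.
models = {
    "model_a": lambda prompt: "Paris",
    "model_b": lambda prompt: "Paris, France",
    "model_c": lambda prompt: "Lyon",
}
responses = query_all(models, "What is the capital of France?")
ranked = rank_responses(responses, score=lambda text: float("Paris" in text))
for name, text, value in ranked:
    print(name, text, value)
```

Using threads suits this pattern because real LLM calls are network-bound, so the total wait is roughly that of the slowest model rather than the sum of all of them.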

Out of the box, MultiLLM is set up to compare code generated by prominent LLMs such as GPT-3, GPT-4, and Google Bard. The framework is extensible, however: users can plug in additional LLMs and write their own ranking functions to evaluate other kinds of output across different models.
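One way to picture the two extension points (adding an LLM and supplying a ranking function) is a small registry plus a scoring function for generated code. The sketch below assumes, purely for illustration, that code is ranked by the fraction of caller-supplied test cases it passes. `register_model`, `pass_rate`, and the stub models are hypothetical and do not reflect MultiLLM’s real interface.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical registry mapping a model name to a prompt -> reply function.
MODELS: Dict[str, Callable[[str], str]] = {}


def register_model(name: str):
    """Decorator that adds an LLM wrapper to the registry under `name`."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        MODELS[name] = fn
        return fn
    return decorator


def pass_rate(code: str, func_name: str, cases: List[Tuple[tuple, object]]) -> float:
    """Score generated code by the fraction of test cases it passes.

    WARNING: exec() runs untrusted code in this process; sandbox it in real use.
    """
    namespace: dict = {}
    try:
        exec(code, namespace)
        func = namespace[func_name]
    except Exception:
        return 0.0  # code that fails to load or lacks the function scores zero
    passed = 0
    for args, expected in cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one case simply counts as a failure
    return passed / len(cases) if cases else 0.0


@register_model("stub_correct")
def stub_correct(prompt: str) -> str:
    return "def add(a, b):\n    return a + b"


@register_model("stub_buggy")
def stub_buggy(prompt: str) -> str:
    return "def add(a, b):\n    return a - b"


cases = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]
prompt = "Write a Python function add(a, b) that returns the sum."
scores = {name: pass_rate(model(prompt), "add", cases) for name, model in MODELS.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Executing model output with `exec()` is unsafe outside a sandbox; a real ranking function would isolate the code, for example in a separate process with a timeout.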

Because both the set of models and the ranking criteria are configurable, MultiLLM can be applied to a range of tasks. Whether the request is for code or for a specific answer, MultiLLM queries multiple LLMs concurrently and ranks their responses so that the best-scoring output comes out on top.

Individual LLMs can occasionally produce incorrect information about people, places, or facts. By aggregating outputs from multiple LLMs and comparing them with MultiLLM, users reduce the risk of relying on a single model’s possibly inaccurate answer.
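As one illustration of how comparing outputs can catch a single model’s mistake, the sketch below ranks factual answers by simple majority vote after light normalization. Majority voting is a stand-in technique chosen for this example, not necessarily how MultiLLM ranks responses, and `normalize` and `consensus` are hypothetical helpers.

```python
from collections import Counter
from typing import Dict, Tuple


def normalize(answer: str) -> str:
    """Crude normalization: lowercase, trim, drop a trailing period, collapse spaces."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def consensus(answers: Dict[str, str]) -> Tuple[str, float]:
    """Return the most common normalized answer and the share of models that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(normalize(text) for text in answers.values())
    top, votes = counts.most_common(1)[0]
    return top, votes / len(answers)


answers = {
    "model_a": "Canberra",
    "model_b": "canberra.",
    "model_c": "Sydney",
}
best, agreement = consensus(answers)
print(best, round(agreement, 2))  # prints: canberra 0.67
```

The agreement fraction gives a rough confidence signal: a low value suggests the models disagree and the answer deserves a manual check.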

To dig deeper, the MultiLLM source code is available on GitHub, and the accompanying VerifAI blog article describes the framework in more detail.